# BlinkDL

**Repository Path**: RedCodeX/BlinkDL

## Basic Information

- **Project Name**: BlinkDL

- **Description**: BlinkDL, a deep convolutional network runtime library written by Peng Bo; policy network

- **Primary Language**: Unknown

- **License**: Apache-2.0

- **Default Branch**: master

- **Homepage**: None

- **GVP Project**: No

## Statistics

- **Stars**: 0

- **Forks**: 0

- **Created**: 2020-09-09

- **Last Updated**: 2020-12-19

## Categories & Tags

**Categories**: Uncategorized

**Tags**: None

## README

# BlinkDL

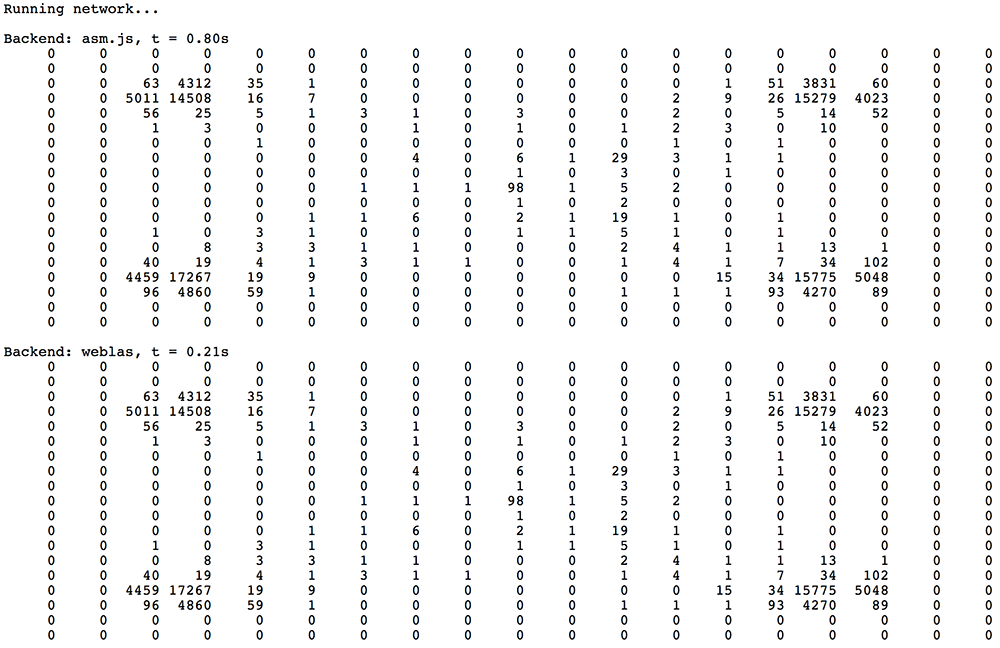

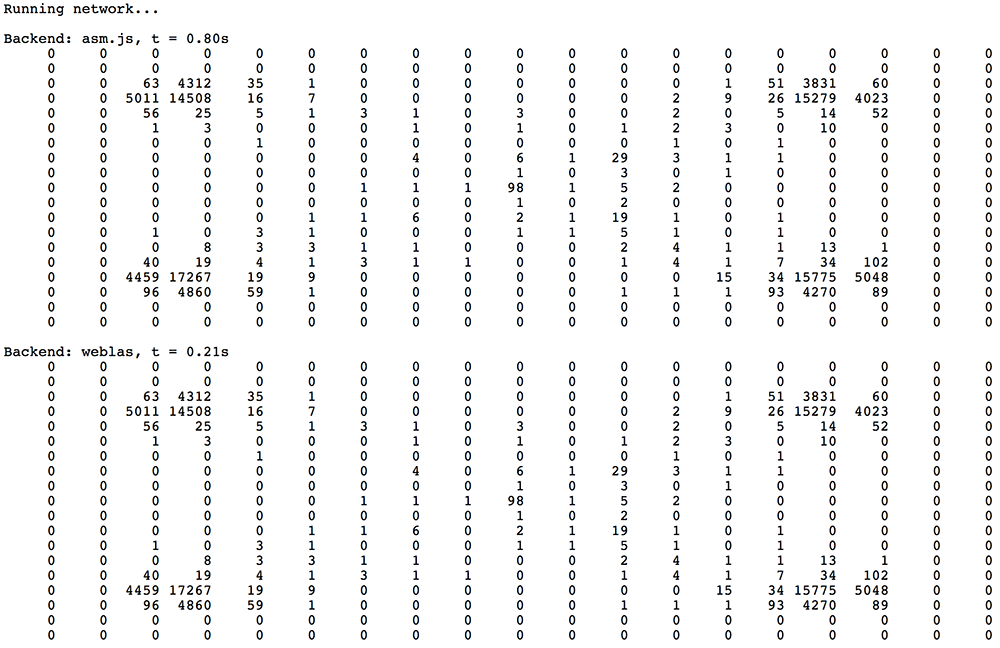

A minimalist deep learning library in JavaScript using WebGL + asm.js. Runs in your browser.

Currently it is a proof-of-concept (inference only). Note: Convolution is buggy when memories overlap.

The WebGL backend is powered by weblas: https://github.com/waylonflinn/weblas.

## Example

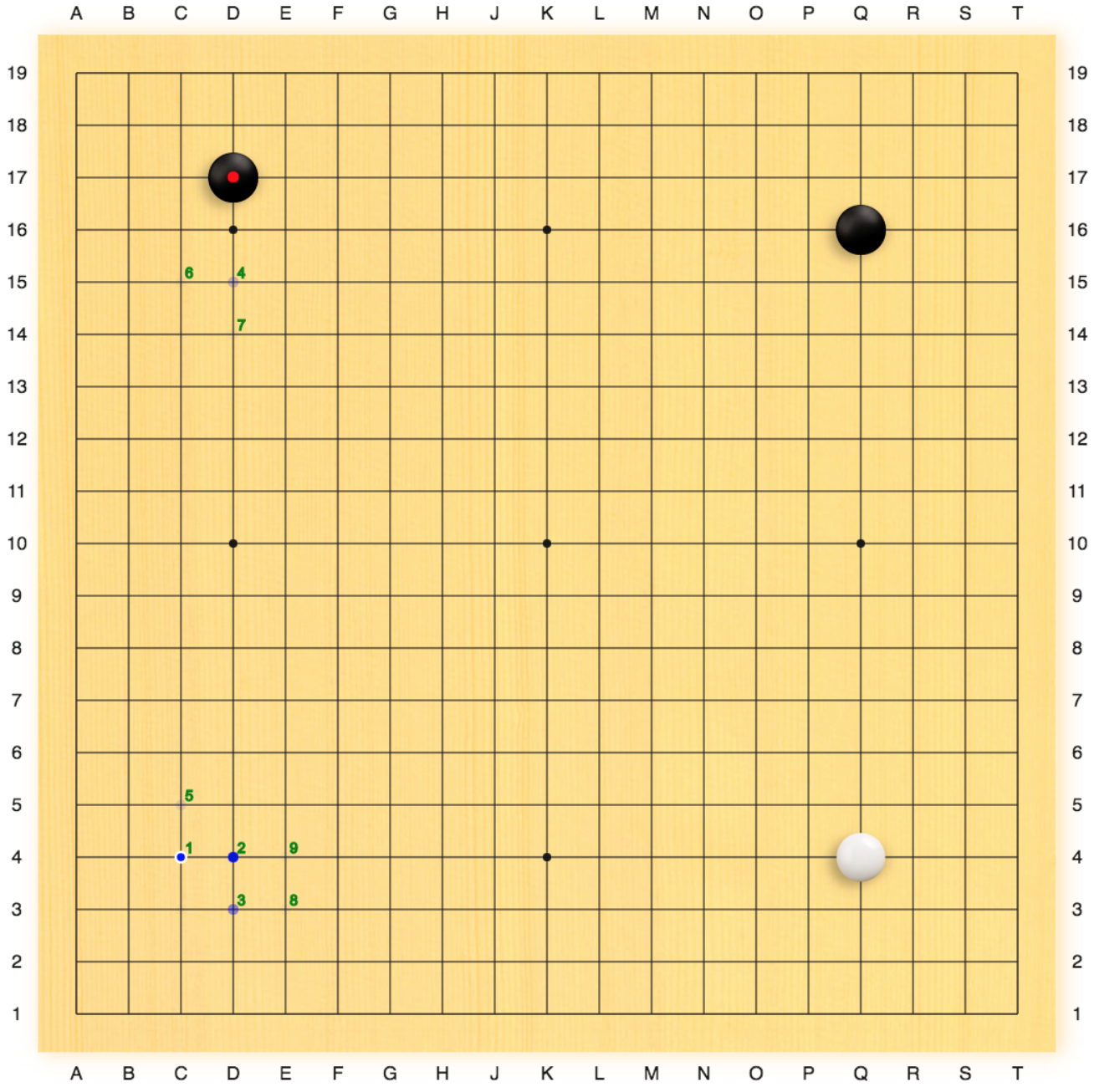

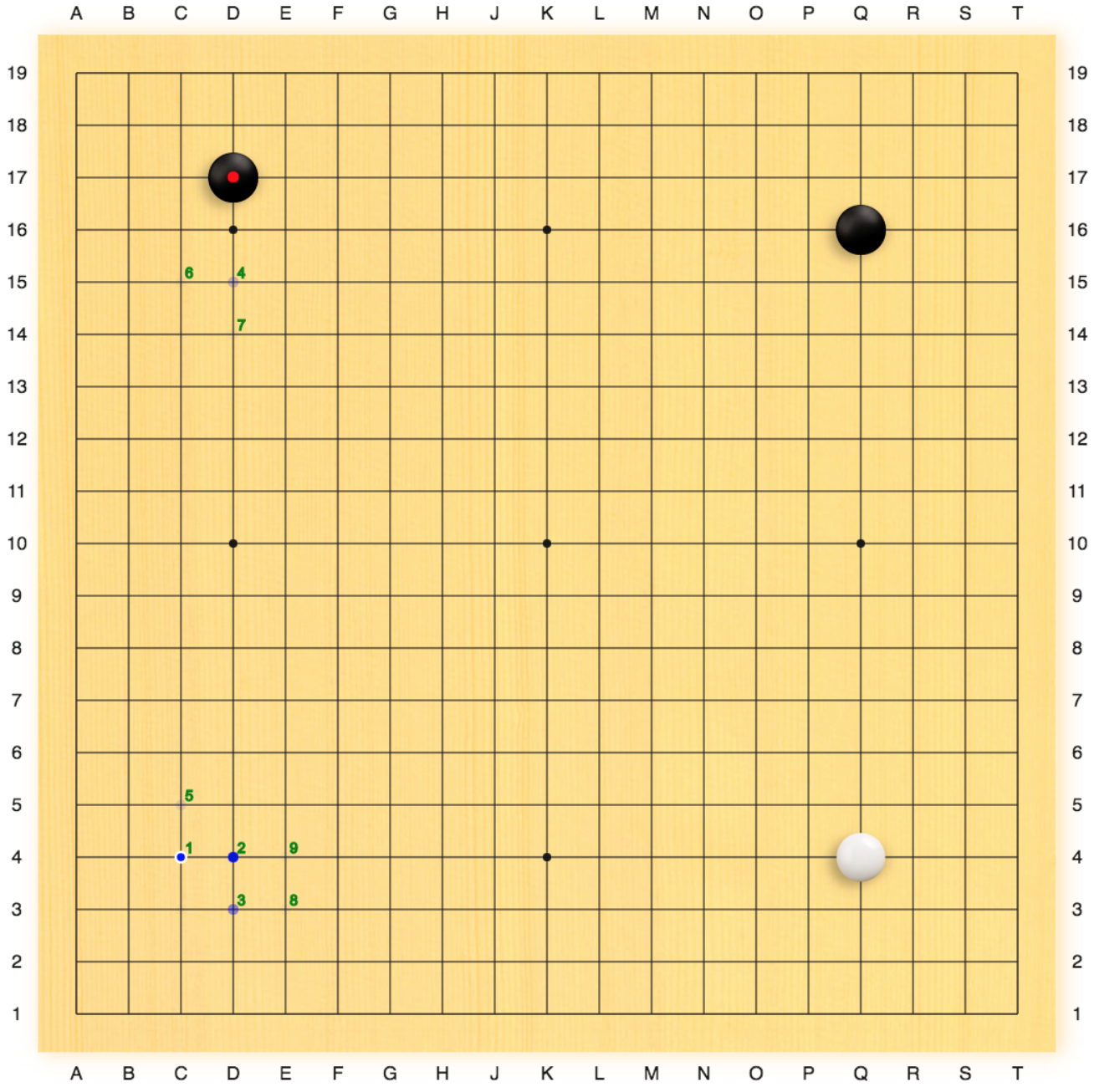

https://withablink.coding.me/goPolicyNet/ : a weiqi (baduk, go) policy network in AlphaGo style:

    const N = 19;
    const NN = N * N;
    const nFeaturePlane = 8;
    const nFilter = 128;

    const x = new BlinkArray();
    x.Init('weblas');
    x.nChannel = nFeaturePlane;
    x.data = new Float32Array(nFeaturePlane * NN);
    for (var i = 0; i < NN; i++)
        x.data[5 * NN + i] = 1; // set feature plane for empty board

    // pre-act residual network with 6 residual blocks
    const bak = new Float32Array(nFilter * NN);
    x.Convolution(nFilter, 3);
    x.CopyTo(bak);
    x.BatchNorm().ReLU().Convolution(nFilter, 3);
    x.BatchNorm().ReLU().Convolution(nFilter, 3);
    x.Add(bak).CopyTo(bak);
    x.BatchNorm().ReLU().Convolution(nFilter, 3);
    x.BatchNorm().ReLU().Convolution(nFilter, 3);
    x.Add(bak).CopyTo(bak);
    x.BatchNorm().ReLU().Convolution(nFilter, 3);
    x.BatchNorm().ReLU().Convolution(nFilter, 3);
    x.Add(bak).CopyTo(bak);
    x.BatchNorm().ReLU().Convolution(nFilter, 3);
    x.BatchNorm().ReLU().Convolution(nFilter, 3);
    x.Add(bak).CopyTo(bak);
    x.BatchNorm().ReLU().Convolution(nFilter, 3);
    x.BatchNorm().ReLU().Convolution(nFilter, 3);
    x.Add(bak).CopyTo(bak);
    x.BatchNorm().ReLU().Convolution(nFilter, 3);
    x.BatchNorm().ReLU().Convolution(nFilter, 3);
    x.Add(bak);
    x.BatchNorm().ReLU().Convolution(1, 1).Softmax();
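The six residual blocks in the example repeat the same three statements, so they can be folded into a loop. The following is a minimal sketch, not part of BlinkDL: `residualTower` is a hypothetical helper name, and it assumes only the chainable methods that appear in the example (`Convolution`, `BatchNorm`, `ReLU`, `Add`, `CopyTo`).

```javascript
// Hypothetical helper (not part of BlinkDL): runs the input convolution
// followed by nBlock pre-activation residual blocks, using only the
// methods shown in the example above.
function residualTower(x, bak, nFilter, nBlock) {
  x.Convolution(nFilter, 3);
  x.CopyTo(bak); // keep the block input for the skip connection

  for (let b = 0; b < nBlock; b++) {
    x.BatchNorm().ReLU().Convolution(nFilter, 3);
    x.BatchNorm().ReLU().Convolution(nFilter, 3);
    x.Add(bak); // skip connection
    // The sum after the last block is not reused, so it is not copied,
    // matching the unrolled example.
    if (b < nBlock - 1) x.CopyTo(bak);
  }
  return x;
}
```

With this helper, the body of the example would reduce to `residualTower(x, bak, nFilter, 6);` followed by `x.BatchNorm().ReLU().Convolution(1, 1).Softmax();`.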


## Usage

## Todo

- [x] Convolution (3x3_pad_1 and 1x1), BatchNorm, ReLU, Softmax

- [ ] Pooling layer

- [ ] FC layer

- [ ] Strided convolution

- [ ] Transposed convolution

- [ ] Webworker and async

- [ ] Faster inference with weblas pipeline, WebGPU, WebAssembly

- [ ] Memory manager

- [ ] Training
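For readers unfamiliar with the operations in the first item, ReLU and Softmax can be sketched in plain JavaScript on a `Float32Array`. This is a CPU reference sketch only and does not reflect how BlinkDL or weblas implement them; `relu` and `softmax` are hypothetical function names.

```javascript
// Reference sketch (not BlinkDL's implementation): element-wise ReLU.
function relu(a) {
  const out = new Float32Array(a.length);
  for (let i = 0; i < a.length; i++) out[i] = Math.max(0, a[i]);
  return out;
}

// Reference sketch: softmax over the whole array, with the maximum
// subtracted first so that Math.exp does not overflow.
function softmax(a) {
  let max = -Infinity;
  for (let i = 0; i < a.length; i++) if (a[i] > max) max = a[i];

  const out = new Float32Array(a.length);
  let sum = 0;
  for (let i = 0; i < a.length; i++) {
    out[i] = Math.exp(a[i] - max);
    sum += out[i];
  }
  for (let i = 0; i < a.length; i++) out[i] /= sum;
  return out;
}
```

Applied to the 19 x 19 output plane of the policy network, `softmax` would turn the 361 raw scores into move probabilities that sum to 1.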
