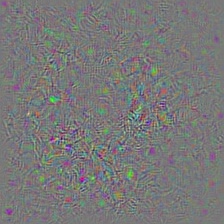

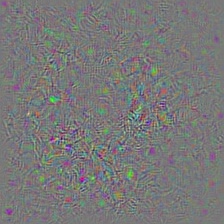

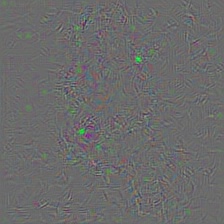

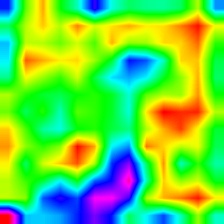

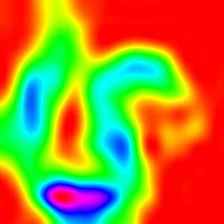

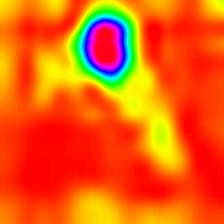

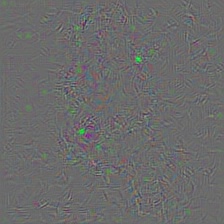

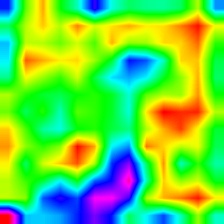

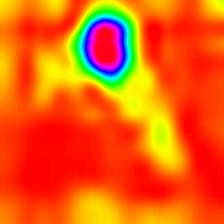

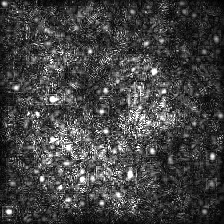

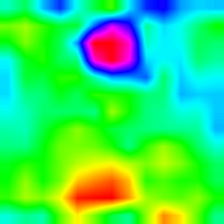

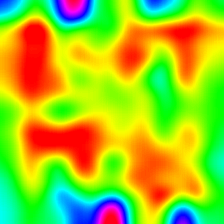

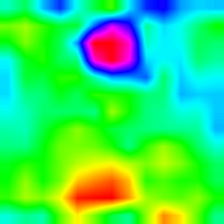

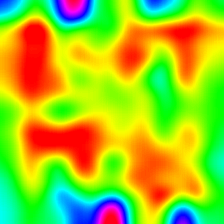

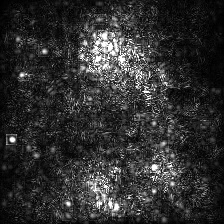

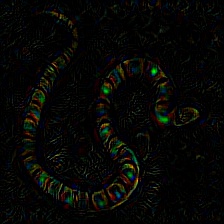

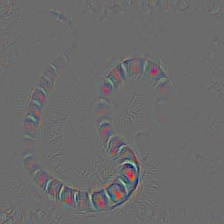

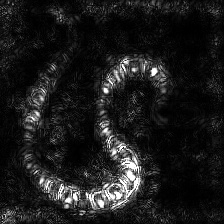

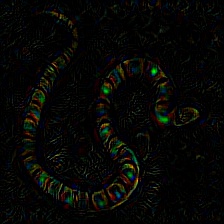

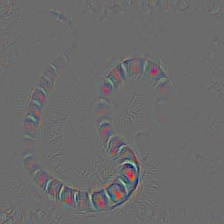

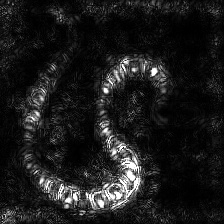

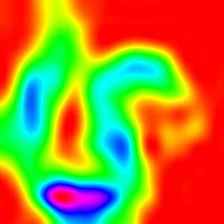

| Target class: King Snake (56) | Target class: Mastiff (243) | Target class: Spider (72) | |

| Original Image |  |

|

|

| Colored Vanilla Backpropagation |  |

|

|

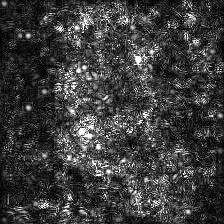

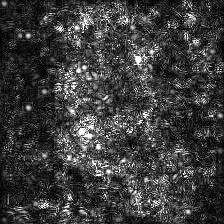

Vanilla Backpropagation Saliency |  |

|

|

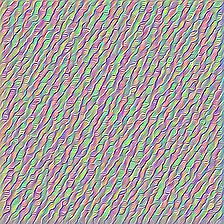

| Colored Guided Backpropagation (GB) |

|

|

|

| Guided Backpropagation Saliency (GB) |

|

|

|

| Guided Backpropagation Negative Saliency (GB) |

|

|

|

| Guided Backpropagation Positive Saliency (GB) |

|

|

|

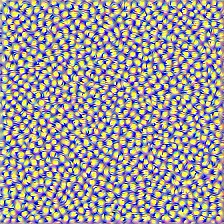

| Gradient-weighted Class Activation Map (Grad-CAM) |

|

|

|

| Gradient-weighted Class Activation Heatmap (Grad-CAM) |

|

|

|

| Gradient-weighted Class Activation Heatmap on Image (Grad-CAM) |

|

|

|

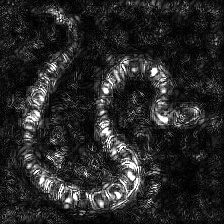

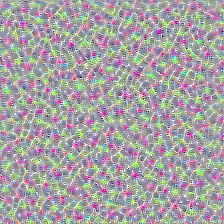

| Score-weighted Class Activation Map (Score-CAM) |

|

|

|

| Score-weighted Class Activation Heatmap (Score-CAM) |

|

|

|

| Score-weighted Class Activation Heatmap on Image (Score-CAM) |

|

|

|

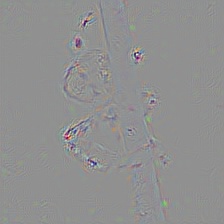

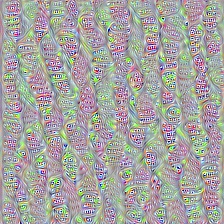

| Colored Guided Gradient-weighted Class Activation Map (Guided-Grad-CAM) |

|

|

|

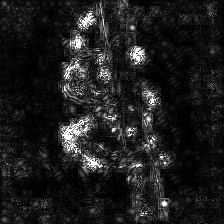

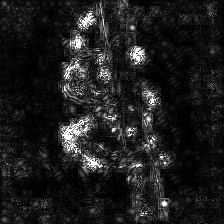

| Guided Gradient-weighted Class Activation Map Saliency (Guided-Grad-CAM) |

|

|

|

| Integrated Gradients (without image multiplication) |

|

|

|

| | Class Activation Map | Class Activation Heatmap | Class Activation Heatmap on Image |
|---|---|---|---|
| LayerCAM (Layer 9) | | | |
| LayerCAM (Layer 16) | | | |
| LayerCAM (Layer 23) | | | |
| LayerCAM (Layer 30) | | | |

| | | | |
|---|---|---|---|
| Vanilla Grad X Image | | | |
| Guided Grad X Image | | | |
| Integrated Grad X Image | | | |

| | | | |
|---|---|---|---|
| Vanilla Backprop | | | |
| Guided Backprop | | | |

| | | | |
|---|---|---|---|
| Layer 2 (Conv 1-2) | | | |
| Layer 10 (Conv 2-1) | | | |
| Layer 17 (Conv 3-1) | | | |
| Layer 24 (Conv 4-1) | | | |

| Input Image | Layer Vis. (Filter=0) | Filter Vis. (Layer=29) |
|---|---|---|
| | | |

| Layer 0: Conv2d | Layer 2: MaxPool2d | Layer 4: ReLU |
|---|---|---|
| | | |
| Layer 7: ReLU | Layer 9: ReLU | Layer 12: MaxPool2d |
| | | |

| Original Image | |
|---|---|
| VGG19 Layer: 34 (Final Conv. Layer) Filter: 94 | |
| VGG19 Layer: 34 (Final Conv. Layer) Filter: 103 | |

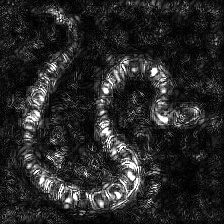

| Target class: Worm Snake (52) - (VGG19) | Target class: Spider (72) - (VGG19) |
|---|---|
| | |

| No Regularization | L1 Regularization | L2 Regularization |
|---|---|---|
| | | |