# kubernetes-demo

**Repository Path**: dxh-gitee/kubernetes-demo

## Basic Information

- **Project Name**: kubernetes-demo

- **Description**: Kubernetes (k8s) study notes

- **Primary Language**: YAML

- **License**: AGPL-3.0

- **Default Branch**: master

- **Homepage**: None

- **GVP Project**: No

## Statistics

- **Stars**: 0

- **Forks**: 1

- **Created**: 2023-02-22

- **Last Updated**: 2023-02-22

## Categories & Tags

**Categories**: Uncategorized

**Tags**: None

## README

---

title: Certified Kubernetes Administrator (CKA) Exam Notes

tags:

- Kubernetes

- CKA

categories:

- Kubernetes

toc: true

recommend: 1

keywords: Kubernetes

uniqueId: '2021-09-21 15:25:14/Kubernetes 管理员认证(CKA)考试笔记.html'

mathJax: false

date: 2021-09-21 23:25:14

thumbnail: https://img-blog.csdnimg.cn/e8648321408648f1b3aedd7b342356cb.png

---

**The meaning of living is learning to live truthfully; the meaning of life is searching for the meaning of living. -- 山河已无恙**

## Foreword

***

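+ I'm preparing for the `CKA` certification and signed up for a course. It was painfully expensive, so I have to pass.

+ This post collects the notes I took while attending that course; it works well for review.

+ The post covers Docker and Kubernetes (k8s).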

***

# I. Docker basics

## 1. Container ?= Docker

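**What is a container, and what is Docker?** Most of us have used a bootable USB drive: when the operating system is broken and the machine won't start, you plug in the drive and boot from it. The drive ships with some basic software, so **you can think of the boot drive as something like an image**. It brings up a system on the machine, and **that running WinPE system is the container**. The CPU and memory it needs all come from the physical machine, that is, the computer that would not boot.

In practice we have to manage many containers and images, and we cannot keep one image per USB drive. **We need a runtime, that is, software that manages containers.** Tools such as `runc`, `lxc`, `gvisor`, and `kata` manage containers only, not images; they are called **low-level runtimes**.

Low-level runtimes are single-purpose and cannot manage images, so we also need **high-level runtimes** such as `docker`, `podman`, and `containerd`. These call low-level runtimes like runc and can manage both containers and images. **Kubernetes manages high-level runtimes.**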

**Disable console screen blanking**

```bash

setterm -blank 0

```

**Configure the yum repositories**

```bash

rm -rf /etc/yum.repos.d/

wget ftp://ftp.rhce.cc/k8s/* -P /etc/yum.repos.d/

```

**Configure a Docker registry mirror**

```bash

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://2tefyfv7.mirror.aliyuncs.com"]

}

EOF

sudo systemctl daemon-reload

sudo systemctl restart docker

```
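A malformed `daemon.json` keeps the Docker daemon from starting, so it can help to write the mirror setting to a scratch file and check it before installing it. The sketch below assumes `python3` is available as a JSON checker; `write_mirror_config`, `is_valid_json`, and the temporary file are illustrative names, not part of Docker.

```bash
#!/usr/bin/env bash
# Sketch: generate a registry-mirror config in a scratch file and validate it.
# write_mirror_config / is_valid_json are hypothetical helper names.

write_mirror_config() {
    local target="$1" mirror="$2"
    cat > "$target" <<EOF
{
  "registry-mirrors": ["$mirror"]
}
EOF
}

# Return 0 if the file parses as JSON (assumes python3 is installed).
is_valid_json() {
    python3 -m json.tool "$1" > /dev/null 2>&1
}

candidate="$(mktemp)"
write_mirror_config "$candidate" "https://2tefyfv7.mirror.aliyuncs.com"

if is_valid_json "$candidate"; then
    echo "config looks valid: $candidate"
else
    echo "config did not validate (or python3 is missing): $candidate" >&2
fi
```

Once the file validates, it can be copied to `/etc/docker/daemon.json`, followed by the `systemctl restart docker` step shown above.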

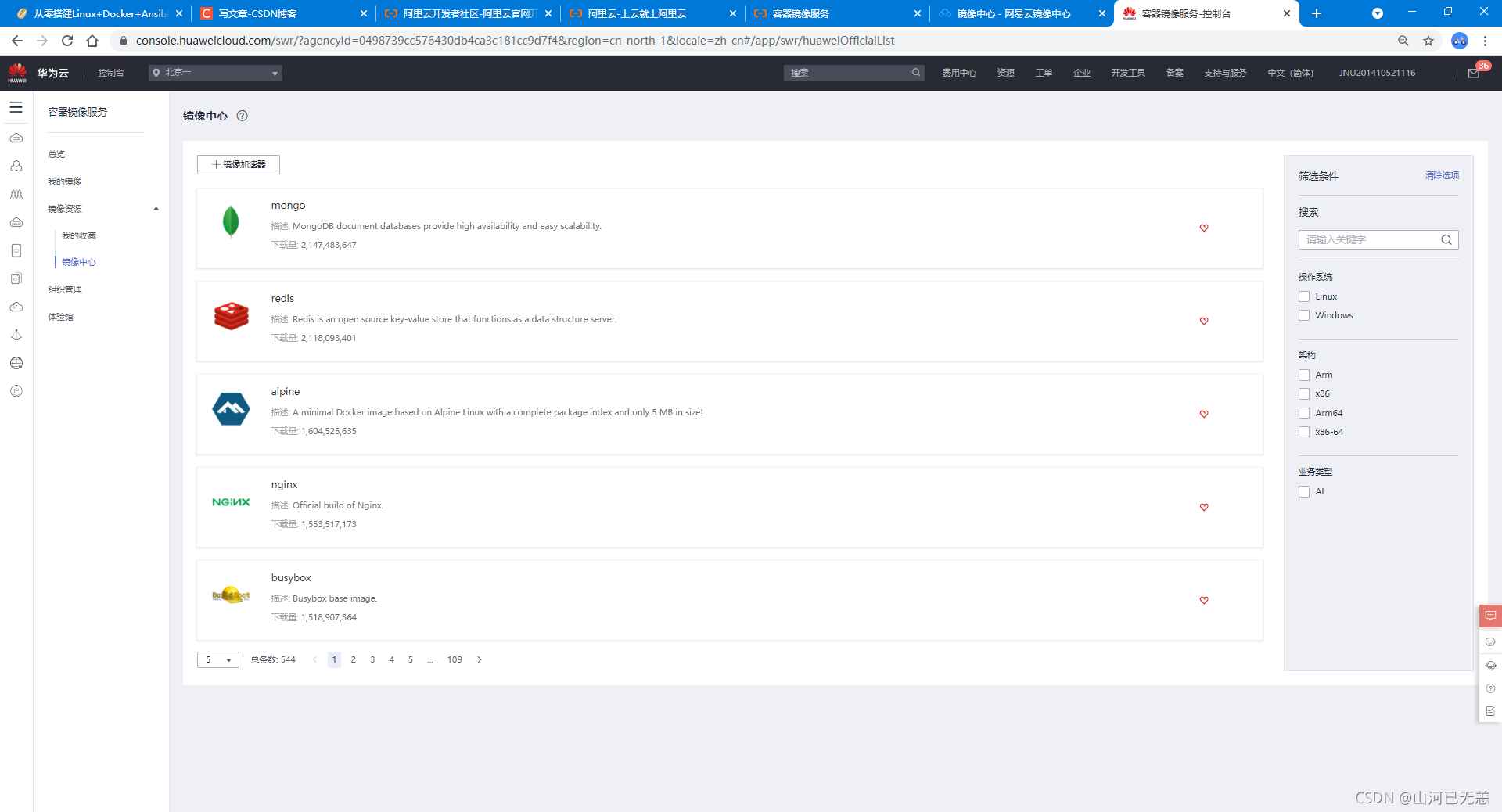

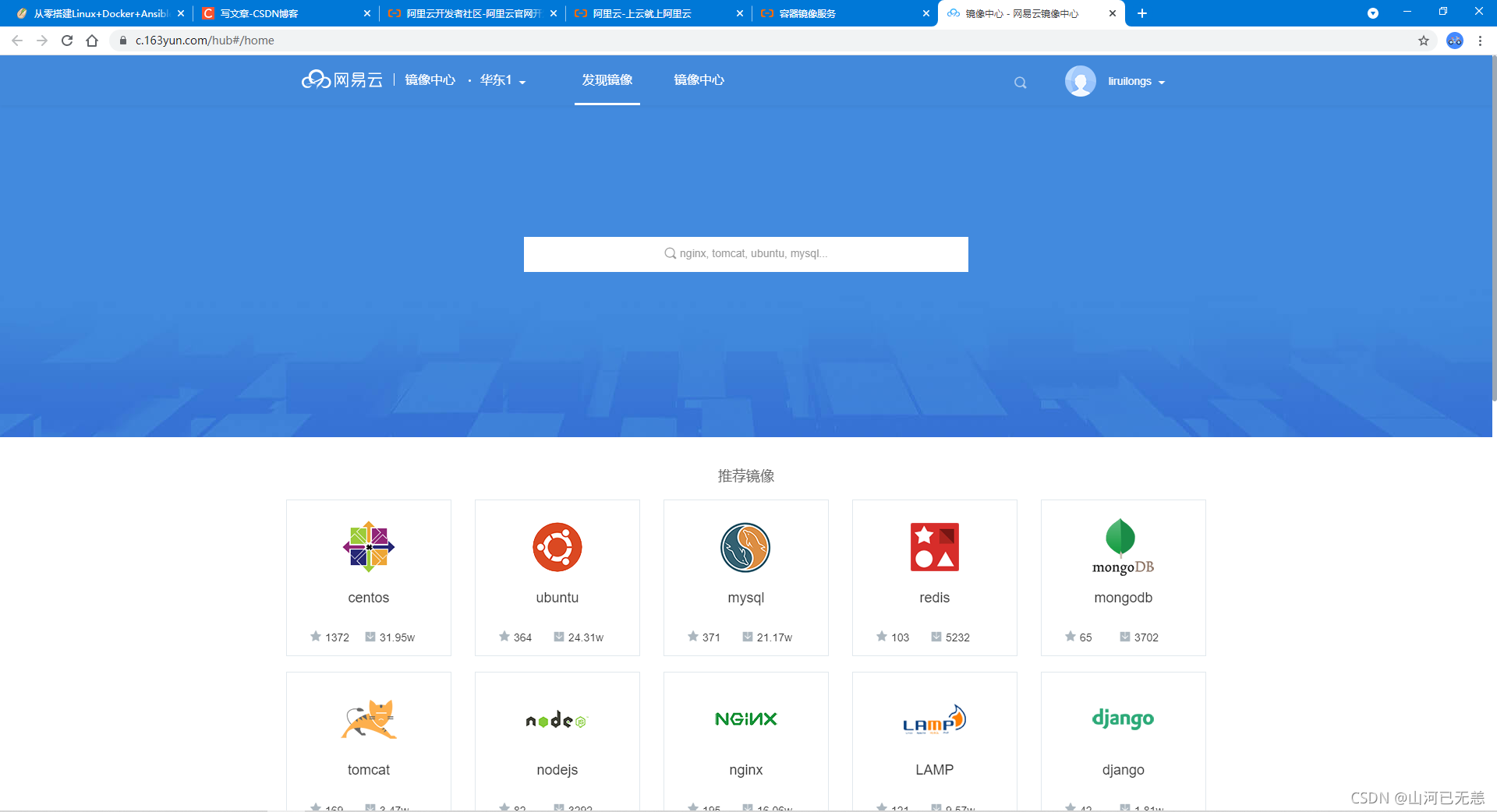

### Using registries hosted in China

+ **[Huawei Cloud](https://console.huaweicloud.com/swr/?region=cn-north-1&locale=zh-cn#/app/swr/huaweiOfficialList)**

+ **[NetEase Cloud](https://c.163yun.com/hub#/home)**

+ **[Alibaba Cloud](https://cr.console.aliyun.com/cn-beijing/instances/images)**

## 2. Docker image management

```bash

┌──(liruilong㉿Liruilong)-[/mnt/c/Users/lenovo]

└─$ ssh root@192.168.26.55

Last login: Fri Oct 1 16:39:16 2021 from 192.168.26.1

┌──[root@liruilongs.github.io]-[~]

└─$ systemctl status docker

● docker.service - Docker Application Container Engine

Loaded: loaded (/usr/lib/systemd/system/docker.service; enabled; vendor preset: disabled)

Active: active (running) since Sun 2021-09-26 02:07:56 CST; 1 weeks 0 days ago

Docs: https://docs.docker.com

Main PID: 1004 (dockerd)

Memory: 136.1M

CGroup: /system.slice/docker.service

└─1004 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

......

┌──[root@liruilongs.github.io]-[~]

└─$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

┌──[root@liruilongs.github.io]-[~]

└─$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

┌──[root@liruilongs.github.io]-[~]

└─$

```

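|Docker image management|Description|
|--|--|
|Image naming|**The registry defaults to docker.io**|
|docker pull IMAGE|**Pull an image**|
|docker tag IMAGE NEW_NAME|**Tag (rename) an image; similar to a hard link in Linux**|
|docker rmi IMAGE|**Remove an image**|
|docker save IMAGE > filename.tar|**Save (back up) an image**|
|docker load -i filename.tar|**Load a saved image**|
|docker export CONTAINER > filename.tar|**Export a container's filesystem as a tar archive**|
|cat filename.tar \| docker import - IMAGE|**Import that archive as an image**|
|docker history IMAGE --no-trunc|Show the complete, untruncated build steps|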

```bash

┌──[root@liruilongs.github.io]-[~]

└─$ docker images | grep -v TAG | awk '{print $1":"$2}'

nginx:latest

mysql:latest

```

**Back up all images**

`docker images | grep -v TAG | awk '{print $1":"$2}' | xargs docker save >all.tar`

```bash

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker images | grep -v TAG | awk '{print $1":"$2}' | xargs docker save >all.tar

┌──[root@liruilongs.github.io]-[~/docker]

└─$ ls

all.tar docker_images_util_202110032229_UCPY4C5k.sh

```
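The `grep -v TAG | awk` pipeline above works for the two images shown, but it would also pass along untagged images as `<none>:<none>`, which `docker save` cannot resolve. A minimal sketch of a filter for that case follows; `keep_tagged_refs` is a hypothetical helper name, and the sample text stands in for the output of `docker images --format '{{.Repository}}:{{.Tag}}'`.

```bash
#!/usr/bin/env bash
# keep_tagged_refs: read "repository:tag" lines on stdin and drop
# dangling entries such as "<none>:<none>". Hypothetical helper name.
keep_tagged_refs() {
    grep -v '<none>' || true   # "|| true": an empty result is not an error
}

# Sample input standing in for:
#   docker images --format '{{.Repository}}:{{.Tag}}'
sample='nginx:latest
mysql:latest
<none>:<none>'

printf '%s\n' "$sample" | keep_tagged_refs
```

With Docker available, the same filter could sit in the backup pipeline, for example `docker images --format '{{.Repository}}:{{.Tag}}' | keep_tagged_refs | xargs -r docker save -o all.tar` (`xargs -r` is a GNU extension that skips the command when the input is empty).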

**Remove all images**

`docker images | grep -v TAG | awk '{print $1":"$2}' | xargs docker rmi`

```bash

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx latest f8f4ffc8092c 5 days ago 133MB

mysql latest 2fe463762680 5 days ago 514MB

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker images | grep -v TAG | awk '{print $1":"$2}' | xargs docker rmi

Untagged: nginx:latest

Untagged: nginx@sha256:765e51caa9e739220d59c7f7a75508e77361b441dccf128483b7f5cce8306652

Deleted: sha256:f8f4ffc8092c956ddd6a3a64814f36882798065799b8aedeebedf2855af3395b

Deleted: sha256:f208904eecb00a0769d263e81b8234f741519fefa262482d106c321ddc9773df

Deleted: sha256:ed6dd2b44338215d30a589d7d36cb4ffd05eb28d2e663a23108d03b3ac273d27

Deleted: sha256:c9958d4f33715556566095ccc716e49175a1fded2fa759dbd747750a89453490

Deleted: sha256:c47815d475f74f82afb68ef7347b036957e7e1a1b0d71c300bdb4f5975163d6a

Deleted: sha256:3b06b30cf952c2f24b6eabdff61b633aa03e1367f1ace996260fc3e236991eec

Untagged: mysql:latest

Untagged: mysql@sha256:4fcf5df6c46c80db19675a5c067e737c1bc8b0e78e94e816a778ae2c6577213d

Deleted: sha256:2fe4637626805dc6df98d3dc17fa9b5035802dcbd3832ead172e3145cd7c07c2

Deleted: sha256:e00bdaa10222919253848d65585d53278a2f494ce8c6a445e5af0ebfe239b3b5

Deleted: sha256:83411745a5928b2a3c2b6510363218fb390329f824e04bab13573e7a752afd50

Deleted: sha256:e8e521a71a92aad623b250b0a192a22d54ad8bbeb943f7111026041dce20d94f

Deleted: sha256:024ee0ef78b28663bc07df401ae3a258ae012bd5f37c2960cf638ab4bc04fafd

Deleted: sha256:597139ec344c8cb622127618ae21345b96dd23e36b5d04b071a3fd92d207a2c0

Deleted: sha256:28909b85bd680fc47702edb647a06183ae5f3e3020f44ec0d125bf75936aa923

Deleted: sha256:4e007ef1e2a3e1e0ffb7c0ad8c9ea86d3d3064e360eaa16e7c8e10f514f68339

Deleted: sha256:b01d7bbbd5c0e2e5ae10de108aba7cd2d059bdd890814931f6192c97fc8aa984

Deleted: sha256:d98a368fc2299bfa2c34cc634fa9ca34bf1d035e0cca02e8c9f0a07700f18103

Deleted: sha256:95968d83b58ae5eec87e4c9027baa628d0e24e4acebea5d0f35eb1b957dd4672

Deleted: sha256:425adb901baf7d6686271d2ce9d42b8ca67e53cffa1bc05622fd0226ae40e9d8

Deleted: sha256:476baebdfbf7a68c50e979971fcd47d799d1b194bcf1f03c1c979e9262bcd364

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

┌──[root@liruilongs.github.io]-[~/docker]

```

**Load all images**

`docker load -i all.tar`

```bash

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker load -i all.tar

476baebdfbf7: Loading layer 72.53MB/72.53MB

525950111558: Loading layer 64.97MB/64.97MB

0772cb25d5ca: Loading layer 3.072kB/3.072kB

6e109f6c2f99: Loading layer 4.096kB/4.096kB

88891187bdd7: Loading layer 3.584kB/3.584kB

65e1ea1dc98c: Loading layer 7.168kB/7.168kB

Loaded image: nginx:latest

f2f5bad82361: Loading layer 338.4kB/338.4kB

96fe563c6126: Loading layer 9.557MB/9.557MB

44bc6574c36f: Loading layer 4.202MB/4.202MB

e333ff907af7: Loading layer 2.048kB/2.048kB

4cffbf4e4fe3: Loading layer 53.77MB/53.77MB

42417c6d26fc: Loading layer 5.632kB/5.632kB

c786189c417d: Loading layer 3.584kB/3.584kB

2265f824a3a8: Loading layer 378.8MB/378.8MB

6eac57c056e6: Loading layer 5.632kB/5.632kB

92b76bd444bf: Loading layer 17.92kB/17.92kB

0b282e0f658a: Loading layer 1.536kB/1.536kB

Loaded image: mysql:latest

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx latest f8f4ffc8092c 5 days ago 133MB

mysql latest 2fe463762680 5 days ago 514MB

┌──[root@liruilongs.github.io]-[~/docker]

└─$

```

**A container started from the mysql image runs a single mysqld process: CMD ["mysqld"]**

```bash

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker history mysql

IMAGE CREATED CREATED BY SIZE COMMENT

2fe463762680 5 days ago /bin/sh -c #(nop) CMD ["mysqld"] 0B

5 days ago /bin/sh -c #(nop) EXPOSE 3306 33060 0B

5 days ago /bin/sh -c #(nop) ENTRYPOINT ["docker-entry… 0B

5 days ago /bin/sh -c ln -s usr/local/bin/docker-entryp… 34B

5 days ago /bin/sh -c #(nop) COPY file:345a22fe55d3e678… 14.5kB

5 days ago /bin/sh -c #(nop) COPY dir:2e040acc386ebd23b… 1.12kB

5 days ago /bin/sh -c #(nop) VOLUME [/var/lib/mysql] 0B

5 days ago /bin/sh -c { echo mysql-community-server m… 378MB

5 days ago /bin/sh -c echo 'deb http://repo.mysql.com/a… 55B

5 days ago /bin/sh -c #(nop) ENV MYSQL_VERSION=8.0.26-… 0B

5 days ago /bin/sh -c #(nop) ENV MYSQL_MAJOR=8.0 0B

5 days ago /bin/sh -c set -ex; key='A4A9406876FCBD3C45… 1.84kB

5 days ago /bin/sh -c apt-get update && apt-get install… 52.2MB

5 days ago /bin/sh -c mkdir /docker-entrypoint-initdb.d 0B

5 days ago /bin/sh -c set -eux; savedAptMark="$(apt-ma… 4.17MB

5 days ago /bin/sh -c #(nop) ENV GOSU_VERSION=1.12 0B

5 days ago /bin/sh -c apt-get update && apt-get install… 9.34MB

5 days ago /bin/sh -c groupadd -r mysql && useradd -r -… 329kB

5 days ago /bin/sh -c #(nop) CMD ["bash"] 0B

5 days ago /bin/sh -c #(nop) ADD file:99db7cfe7952a1c7a… 69.3MB

┌──[root@liruilongs.github.io]-[~/docker]

└─$

```

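## 3. Managing containers with Docker

|Command|Description|
|--|--|
|docker run IMAGE|The simplest way to start a container|
|docker run -it --rm hub.c.163.com/library/centos /bin/bash|With a terminal (`-t`) and interactive input (`-i`)|
|docker run -dit -h node --name=c1 IMAGE COMMAND|Set a hostname and a container name; `-d` (`--detach`) runs the container in the background instead of attaching to it|
|docker run -dit --restart=always IMAGE COMMAND|Restart automatically, so the container comes back up after its process exits|
|docker run -it --rm IMAGE COMMAND|Remove the container when its process ends|
|docker run -dit --restart=always -e VAR1=value1 -e VAR2=value2 IMAGE|Pass environment variables|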

```bash

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker pull centos

Using default tag: latest

latest: Pulling from library/centos

a1d0c7532777: Pull complete

Digest: sha256:a27fd8080b517143cbbbab9dfb7c8571c40d67d534bbdee55bd6c473f432b177

Status: Downloaded newer image for centos:latest

docker.io/library/centos:latest

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker run -it --name=c1 centos # -t attaches bash to a terminal, -i keeps it interactive

WARNING: IPv4 forwarding is disabled. Networking will not work.

[root@f418f094e0d8 /]# ls

bin etc lib lost+found mnt proc run srv tmp var

dev home lib64 media opt root sbin sys usr

[root@f418f094e0d8 /]# exit

exit

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

f418f094e0d8 centos "/bin/bash" 51 seconds ago Exited (0) 4 seconds ago c1

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker run -it --restart=always --name=c2 centos

WARNING: IPv4 forwarding is disabled. Networking will not work.

[root@ecec30685687 /]# exit

exit

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

ecec30685687 centos "/bin/bash" 5 seconds ago Up 1 second c2

f418f094e0d8 centos "/bin/bash" About a minute ago Exited (0) About a minute ago c1

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker rm c1

c1

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker rm c2

Error response from daemon: You cannot remove a running container ecec30685687c9f0af08ea721f6293a3fb635c8290bee3347bb54f11ff3e32fa. Stop the container before attempting removal or force remove

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker run -itd --restart=always --name=c2 centos

docker: Error response from daemon: Conflict. The container name "/c2" is already in use by container "ecec30685687c9f0af08ea721f6293a3fb635c8290bee3347bb54f11ff3e32fa". You have to remove (or rename) that container to be able to reuse that name.

See 'docker run --help'.

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker run -itd --restart=always --name=c3 centos

WARNING: IPv4 forwarding is disabled. Networking will not work.

97ffd93370d4e23e6a3d2e6a0c68030d482cabb8ab71b5ceffb4d703de3a6b0c

┌──[root@liruilongs.github.io]-[~/docker]

└─$

```

**Create a MySQL container**

```bash

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker run -dit --name=db --restart=always -e MYSQL_ROOT_PASSWORD=liruilong -e MYSQL_DATABASE=blog mysql

WARNING: IPv4 forwarding is disabled. Networking will not work.

0a79be3ed7dbd9bdf19202cda74aa3b3db818bd23deca23248404c673c7e1ff7

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

0a79be3ed7db mysql "docker-entrypoint.s…" 3 seconds ago Up 2 seconds 3306/tcp, 33060/tcp db

97ffd93370d4 centos "/bin/bash" 17 minutes ago Up 17 minutes c3

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker logs db

2021-10-03 16:49:41+00:00 [Note] [Entrypoint]: Entrypoint script for MySQL Server 8.0.26-1debian10 started.

2021-10-03 16:49:41+00:00 [Note] [Entrypoint]: Switching to dedicated user 'mysql'

2021-10-03 16:49:41+00:00 [Note] [Entrypoint]: Entrypoint script for MySQL Server 8.0.26-1debian10 started.

2021-10-03 16:49:41+00:00 [Note] [Entrypoint]: Initializing database files

2021-10-03T16:49:41.391137Z 0 [System] [MY-013169] [Server] /usr/sbin/mysqld (mysqld 8.0.26) initializing of server in progress as process 41

2021-10-03T16:49:41.400419Z 1 [System] [MY-013576] [InnoDB] InnoDB initialization has started.

2021-10-03T16:49:42.345302Z 1 [System] [MY-013577] [InnoDB] InnoDB initialization has ended.

2021-10-03T16:49:46.187521Z 0 [Warning] [MY-013746] [Server] A deprecated TLS version TLSv1 is enabled for channel mysql_main

2021-10-03T16:49:46.188871Z 0 [Warning] [MY-013746] [Server] A deprecated TLS version TLSv1.1 is enabled for channel mysql_main

2021-10-03T16:49:46.312124Z 6 [Warning] [MY-010453] [Server] root@localhost is created with an empty password ! Please consider switching off the --initialize-insecure option.

2021-10-03 16:49:55+00:00 [Note] [Entrypoint]: Database files initialized

2021-10-03 16:49:55+00:00 [Note] [Entrypoint]: Starting temporary server

mysqld will log errors to /var/lib/mysql/0a79be3ed7db.err

┌──[root@liruilongs.github.io]-[~/docker]

└─$

```

**Installing nginx**

```bash

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker run -dit --restart=always -p 80 nginx

WARNING: IPv4 forwarding is disabled. Networking will not work.

c7570bd68368f3e4c9a4c8fdce67845bcb5fee12d1cc785d6e448979592a691e

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c7570bd68368 nginx "/docker-entrypoint.…" 4 seconds ago Up 2 seconds 0.0.0.0:49153->80/tcp, :::49153->80/tcp jovial_solomon

0a79be3ed7db mysql "docker-entrypoint.s…" 3 minutes ago Up 3 minutes 3306/tcp, 33060/tcp db

97ffd93370d4 centos "/bin/bash" 20 minutes ago Up 20 minutes c3

┌──[root@liruilongs.github.io]-[~/docker]

└─$

```
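With `-p 80` and no host port, Docker picks the host port itself (49153 in the output above). A small sketch for pulling that number out of `docker port` style output is shown below; `host_port_from_bindings` is a hypothetical helper name, and the sample lines mirror the IPv4 and IPv6 bindings from the listing.

```bash
#!/usr/bin/env bash
# host_port_from_bindings: read binding lines such as "0.0.0.0:49153"
# or ":::49153" on stdin and print the distinct host port numbers.
# Hypothetical helper name, for illustration only.
host_port_from_bindings() {
    sed 's/.*://' | sort -u   # keep only what follows the last colon
}

# Sample input standing in for: docker port jovial_solomon 80/tcp
sample='0.0.0.0:49153
:::49153'

printf '%s\n' "$sample" | host_port_from_bindings
```

On the host from the transcript this could be used as `curl "http://localhost:$(docker port jovial_solomon 80/tcp | host_port_from_bindings)"`, assuming `curl` is installed.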

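## 4. Common container management commands

|Command|Description|
|--|--|
|docker exec CONTAINER COMMAND|Run a new process inside a running container|
|docker start CONTAINER|Start a container|
|docker stop CONTAINER|Stop a container; its IP address is released when it stops|
|docker restart CONTAINER|Restart a container, for example when the service inside needs a restart|
|docker top CONTAINER|List the container's processes|
|docker logs -f node|Follow the logs (here, of a container named `node`)|
|docker inspect CONTAINER|Detailed container information, such as its IP address|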

```bash

┌──[root@liruilongs.github.io]-[~/docker]

└─$ mysql -uroot -pliruilong -h172.17.0.2 -P3306

ERROR 2059 (HY000): Authentication plugin 'caching_sha2_password' cannot be loaded: /usr/lib64/mysql/plugin/caching_sha2_password.so: cannot open shared object file: No such file or directory

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker exec -it db /bin/bash

root@0a79be3ed7db:/# mysql -uroot -p

Enter password:

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 14

Server version: 8.0.26 MySQL Community Server - GPL

Copyright (c) 2000, 2021, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> ALTER USER 'root'@'%' IDENTIFIED BY 'password' PASSWORD EXPIRE NEVER;

Query OK, 0 rows affected (0.02 sec)

mysql> ALTER USER 'root'@'%' IDENTIFIED WITH mysql_native_password BY 'liruilong';

Query OK, 0 rows affected (0.01 sec)

mysql> FLUSH PRIVILEGES;

Query OK, 0 rows affected (0.01 sec)

mysql> exit

Bye

root@0a79be3ed7db:/# eixt

bash: eixt: command not found

root@0a79be3ed7db:/# exit

exit

┌──[root@liruilongs.github.io]-[~/docker]

└─$ mysql -uroot -pliruilong -h172.17.0.2 -P3306

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MySQL connection id is 15

Server version: 8.0.26 MySQL Community Server - GPL

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MySQL [(none)]> use blog

Database changed

MySQL [blog]>

```
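The address `172.17.0.2` used above is whatever Docker assigned to `db` on the default bridge, and it can change when containers are recreated in a different order. A hedged sketch for looking it up instead of hard-coding it follows; `container_ip` is a hypothetical wrapper around `docker inspect` with a Go template.

```bash
#!/usr/bin/env bash
# container_ip: print the IP address(es) of a container on its networks.
# Hypothetical helper; it only wraps `docker inspect -f <template>`.
container_ip() {
    docker inspect -f \
        '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' "$1"
}

# Example use (requires Docker and a running container named "db"):
#   mysql -uroot -p -h"$(container_ip db)" -P3306
```

The function is only defined here, not called, so the block is safe to source on a machine without Docker.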

```bash

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker top db

UID PID PPID C STIME TTY TIME CMD

polkitd 15911 15893 1 00:49 ? 00:00:45 mysqld

┌──[root@liruilongs.github.io]-[~/docker]

└─$

```

```bash

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c7570bd68368 nginx "/docker-entrypoint.…" 43 minutes ago Up 43 minutes 0.0.0.0:49153->80/tcp, :::49153->80/tcp jovial_solomon

0a79be3ed7db mysql "docker-entrypoint.s…" 46 minutes ago Up 46 minutes 3306/tcp, 33060/tcp db

97ffd93370d4 centos "/bin/bash" About an hour ago Up About an hour c3

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker stop db

db

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c7570bd68368 nginx "/docker-entrypoint.…" 43 minutes ago Up 43 minutes 0.0.0.0:49153->80/tcp, :::49153->80/tcp jovial_solomon

97ffd93370d4 centos "/bin/bash" About an hour ago Up About an hour c3

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker start db

db

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c7570bd68368 nginx "/docker-entrypoint.…" 44 minutes ago Up 44 minutes 0.0.0.0:49153->80/tcp, :::49153->80/tcp jovial_solomon

0a79be3ed7db mysql "docker-entrypoint.s…" 47 minutes ago Up 2 seconds 3306/tcp, 33060/tcp db

97ffd93370d4 centos "/bin/bash" About an hour ago Up About an hour c3

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker restart db

db

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c7570bd68368 nginx "/docker-entrypoint.…" 44 minutes ago Up 44 minutes 0.0.0.0:49153->80/tcp, :::49153->80/tcp jovial_solomon

0a79be3ed7db mysql "docker-entrypoint.s…" 47 minutes ago Up 2 seconds 3306/tcp, 33060/tcp db

97ffd93370d4 centos "/bin/bash" About an hour ago Up About an hour c3

┌──[root@liruilongs.github.io]-[~/docker]

└─$

```

**Remove all containers**

```bash

┌──[root@liruilongs.github.io]-[~]

└─$ docker ps | grep -v IMAGE

5b3557283314 nginx "/docker-entrypoint.…" About an hour ago Up About an hour 80/tcp web

c7570bd68368 nginx "/docker-entrypoint.…" 9 hours ago Up 9 hours 0.0.0.0:49153->80/tcp, :::49153->80/tcp jovial_solomon

0a79be3ed7db mysql "docker-entrypoint.s…" 9 hours ago Up 8 hours 3306/tcp, 33060/tcp db

97ffd93370d4 centos "/bin/bash" 9 hours ago Up 9 hours c3

┌──[root@liruilongs.github.io]-[~]

└─$ docker ps | grep -v IMAGE | awk '{print $1}'

5b3557283314

c7570bd68368

0a79be3ed7db

97ffd93370d4

┌──[root@liruilongs.github.io]-[~]

└─$ docker ps | grep -v IMAGE | awk '{print $1}'| xargs docker rm -f

5b3557283314

c7570bd68368

0a79be3ed7db

97ffd93370d4

┌──[root@liruilongs.github.io]-[~]

└─$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

┌──[root@liruilongs.github.io]-[~]

└─$

```
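Plain `docker ps` lists running containers only, so the pipeline above leaves stopped containers behind. `docker ps -aq` prints the IDs of all containers, one per line, without a header to filter out. The sketch below wraps that in a function; `remove_all_containers` is a hypothetical name, and it is destructive, so it is defined but not called here.

```bash
#!/usr/bin/env bash
# remove_all_containers: force-remove every container, running or stopped.
# Hypothetical helper name. Destructive: call it only on a lab machine.
remove_all_containers() {
    local ids
    ids="$(docker ps -aq)"          # -a: include stopped, -q: IDs only
    if [ -z "$ids" ]; then
        echo "no containers to remove"
        return 0
    fi
    # Word splitting on $ids is intentional: one argument per container ID.
    docker rm -f $ids
}
```

The empty check avoids calling `docker rm -f` with no arguments, which would otherwise print a usage error.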

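## 5. Using data volumes

|Command|Description|
|--|--|
|docker run -dit --restart=always -v p_path1:c_path2 IMAGE COMMAND|Similar to port mapping: maps a host directory directly into the container|
|docker run -dit --restart=always -v c_path2 IMAGE COMMAND|With only the container path given, check the mapping with `docker inspect` (the `Mounts` attribute)|
|docker volume create v1|Create a named shared volume, then mount it|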

**Without a volume, data is written to the container layer; removing the container removes that data too**

```bash

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c7570bd68368 nginx "/docker-entrypoint.…" 44 minutes ago Up 44 minutes 0.0.0.0:49153->80/tcp, :::49153->80/tcp jovial_solomon

0a79be3ed7db mysql "docker-entrypoint.s…" 47 minutes ago Up 2 seconds 3306/tcp, 33060/tcp db

97ffd93370d4 centos "/bin/bash" About an hour ago Up About an hour c3

┌──[root@liruilongs.github.io]-[~/docker]

└─$ find / -name liruilong.html

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker exec -it c7570bd68368 /bin/bash

root@c7570bd68368:/# echo "liruilong" > liruilong.html

root@c7570bd68368:/# exit

exit

┌──[root@liruilongs.github.io]-[~/docker]

└─$ find / -name liruilong.html

/var/lib/docker/overlay2/56de0e042c7c5b9704df156b6473b528ca7468d8b1085cb43294f9111b270540/diff/liruilong.html

/var/lib/docker/overlay2/56de0e042c7c5b9704df156b6473b528ca7468d8b1085cb43294f9111b270540/merged/liruilong.html

┌──[root@liruilongs.github.io]-[~/docker]

└─$

```

**docker run -itd --name=web -v /root/docker/liruilong:/liruilong:rw nginx**

```bash

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c7570bd68368 nginx "/docker-entrypoint.…" 8 hours ago Up 8 hours 0.0.0.0:49153->80/tcp, :::49153->80/tcp jovial_solomon

0a79be3ed7db mysql "docker-entrypoint.s…" 8 hours ago Up 7 hours 3306/tcp, 33060/tcp db

97ffd93370d4 centos "/bin/bash" 8 hours ago Up 8 hours c3

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker rm -f web

web

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker run -itd --name=web -v /root/docker/liruilong:/liruilong:rw nginx

WARNING: IPv4 forwarding is disabled. Networking will not work.

5949fba8c9c810ed3a06fcf1bc8148aef22893ec99450cec2443534b2f9eb063

┌──[root@liruilongs.github.io]-[~/docker]

└─$ ls

all.tar docker_images_util_202110032229_UCPY4C5k.sh liruilong

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

5949fba8c9c8 nginx "/docker-entrypoint.…" 57 seconds ago Up 4 seconds 80/tcp web

c7570bd68368 nginx "/docker-entrypoint.…" 8 hours ago Up 8 hours 0.0.0.0:49153->80/tcp, :::49153->80/tcp jovial_solomon

0a79be3ed7db mysql "docker-entrypoint.s…" 8 hours ago Up 7 hours 3306/tcp, 33060/tcp db

97ffd93370d4 centos "/bin/bash" 8 hours ago Up 8 hours c3

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker exec -it web /bin/bash

root@5949fba8c9c8:/# ls

bin docker-entrypoint.d home liruilong opt run sys var

boot docker-entrypoint.sh lib media proc sbin tmp

dev etc lib64 mnt root srv usr

root@5949fba8c9c8:/#

```

**docker volume create v1**

```bash

┌──[root@liruilongs.github.io]-[~/docker]

└─$

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker volume list

DRIVER VOLUME NAME

local 9e939eda6c4d8c574737905857d57014a1c4dda10eef77520e99804c7c67ac39

local 34f699eb0535315b651090afd90768f4e4cfa42acf920753de9015261424812c

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker volume create v1

v1

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker volume list

DRIVER VOLUME NAME

local 9e939eda6c4d8c574737905857d57014a1c4dda10eef77520e99804c7c67ac39

local 34f699eb0535315b651090afd90768f4e4cfa42acf920753de9015261424812c

local v1

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker volume inspect v1

[

{

"CreatedAt": "2021-10-04T08:46:55+08:00",

"Driver": "local",

"Labels": {},

"Mountpoint": "/var/lib/docker/volumes/v1/_data",

"Name": "v1",

"Options": {},

"Scope": "local"

}

]

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker run -itd --name=web -v v1:/liruilong:ro nginx

WARNING: IPv4 forwarding is disabled. Networking will not work.

5b3557283314d5ab745855f3827d070559cd3340f6a2d5a420941e717dc2145b

┌──[root@liruilongs.github.io]-[~/docker]

└─$ ls

all.tar docker_images_util_202110032229_UCPY4C5k.sh liruilong

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker exec -it web bash

root@5b3557283314:/# touch /liruilong/liruilong.sql

touch: cannot touch '/liruilong/liruilong.sql': Read-only file system

root@5b3557283314:/# exit

exit

┌──[root@liruilongs.github.io]-[~/docker]

└─$ touch /var/lib/docker/volumes/v1/_data/liruilong.sql

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker exec -it web bash

root@5b3557283314:/# ls /liruilong/

liruilong.sql

root@5b3557283314:/#

```
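The three `-v` forms used in this section differ only in what comes before the first colon. As a simplified sketch (it ignores relative paths and the `--mount` syntax), the function below classifies a `-v` argument the way the examples above behave; `classify_volume_spec` is a hypothetical name.

```bash
#!/usr/bin/env bash
# classify_volume_spec: say how a `docker run -v` argument is interpreted.
# Simplified illustration; hypothetical helper name.
classify_volume_spec() {
    local spec="$1" source
    case "$spec" in
        *:*) source="${spec%%:*}" ;;             # text before the first colon
        *)   echo "anonymous volume"; return 0 ;; # only a container path
    esac
    case "$source" in
        /*) echo "bind mount" ;;    # absolute host path
        *)  echo "named volume" ;;  # e.g. v1 from `docker volume create v1`
    esac
}

for spec in /root/docker/liruilong:/liruilong:rw v1:/liruilong:ro /liruilong; do
    printf '%-40s %s\n' "$spec" "$(classify_volume_spec "$spec")"
done
```

The trailing `:rw` or `:ro` does not affect the classification; it only sets whether the container may write to the mount, as the `Read-only file system` error above shows.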

**The host can see processes running inside containers**

```bash

┌──[root@liruilongs.github.io]-[~/docker]

└─$ ps aux | grep -v grep | grep mysqld

polkitd 16727 1.6 9.6 1732724 388964 pts/0 Ssl+ 06:48 2:10 mysqld

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

5b3557283314 nginx "/docker-entrypoint.…" 12 minutes ago Up 12 minutes 80/tcp web

c7570bd68368 nginx "/docker-entrypoint.…" 8 hours ago Up 8 hours 0.0.0.0:49153->80/tcp, :::49153->80/tcp jovial_solomon

0a79be3ed7db mysql "docker-entrypoint.s…" 8 hours ago Up 7 hours 3306/tcp, 33060/tcp db

97ffd93370d4 centos "/bin/bash" 8 hours ago Up 8 hours c3

┌──[root@liruilongs.github.io]-[~/docker]

└─$

```

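## 6. Docker network management

|Command|Description|
|--|--|
|docker network list|List all networks|
|docker network inspect 6f70229c85f0|Show the details of one network|
|man -k docker|Search the man pages for Docker entries|
|man docker-network-create|Man page for creating a network|
|docker network create -d bridge --subnet=10.0.0.0/24 mynet|Create a network|
|docker run --net=mynet --rm -it centos /bin/bash|Run a container on a specific network|
|docker run -dit -p HOST_PORT:CONTAINER_PORT IMAGE|Publish a port|
|echo "net.ipv4.ip_forward = 1" >> /etc/sysctl.conf;sysctl -p|NAT mode requires IP forwarding; this form persists across reboots|
|echo 1 > /proc/sys/net/ipv4/ip_forward|NAT mode requires IP forwarding; this form lasts until reboot. Either method works|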

```bash

┌──[root@liruilongs.github.io]-[~]

└─$ ifconfig docker0 # the bridge interface

docker0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255

inet6 fe80::42:38ff:fee1:6cb2 prefixlen 64 scopeid 0x20<link>

ether 02:42:38:e1:6c:b2 txqueuelen 0 (Ethernet)

RX packets 54 bytes 4305 (4.2 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 74 bytes 5306 (5.1 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

┌──[root@liruilongs.github.io]-[~]

└─$ docker network inspect bridge

[

{

"Name": "bridge",

"Id": "ebc5c96c853aa5271006387393b3b2dddcbfbc3b6f1f9ecba44bf87f550ed134",

"Created": "2021-09-26T02:07:56.019076931+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "172.17.0.0/16",

"Gateway": "172.17.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"0a79be3ed7dbd9bdf19202cda74aa3b3db818bd23deca23248404c673c7e1ff7": {

"Name": "db",

"EndpointID": "8fe3dbabc838c14a6e23990abd860824d505d49bd437d47c45a85eed06de2aba",

"MacAddress": "02:42:ac:11:00:02",

"IPv4Address": "172.17.0.2/16",

"IPv6Address": ""

},

"5b3557283314d5ab745855f3827d070559cd3340f6a2d5a420941e717dc2145b": {

"Name": "web",

"EndpointID": "3f52014a93e20c1f71fff7bda51a169648db932a72101e06d2c33633ac778c5b",

"MacAddress": "02:42:ac:11:00:05",

"IPv4Address": "172.17.0.5/16",

"IPv6Address": ""

},

"97ffd93370d4e23e6a3d2e6a0c68030d482cabb8ab71b5ceffb4d703de3a6b0c": {

"Name": "c3",

"EndpointID": "3dca7f002ebf82520ecc0b28ef4e19cd3bc867d1af9763b9a4969423b4e2a5f6",

"MacAddress": "02:42:ac:11:00:03",

"IPv4Address": "172.17.0.3/16",

"IPv6Address": ""

},

"c7570bd68368f3e4c9a4c8fdce67845bcb5fee12d1cc785d6e448979592a691e": {

"Name": "jovial_solomon",

"EndpointID": "56be0daa5a7355201a0625259585561243a4ce1f37736874396a3fb0467f26fe",

"MacAddress": "02:42:ac:11:00:04",

"IPv4Address": "172.17.0.4/16",

"IPv6Address": ""

}

},

"Options": {

"com.docker.network.bridge.default_bridge": "true",

"com.docker.network.bridge.enable_icc": "true",

"com.docker.network.bridge.enable_ip_masquerade": "true",

"com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",

"com.docker.network.bridge.name": "docker0",

"com.docker.network.driver.mtu": "1500"

},

"Labels": {}

}

]

┌──[root@liruilongs.github.io]-[~]

└─$

```

**Create a network**

```bash

┌──[root@liruilongs.github.io]-[~]

└─$ docker network create -d bridge --subnet=10.0.0.0/24 mynet

4b3da203747c7885a7942ace7c72a2fdefd2f538256cfac1a545f7fd3a070dc5

┌──[root@liruilongs.github.io]-[~]

└─$ ifconfig

br-4b3da203747c: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 10.0.0.1 netmask 255.255.255.0 broadcast 10.0.0.255

ether 02:42:f4:31:01:9f txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 8 bytes 648 (648.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

```

**Run a container on a specified network**

```bash

┌──[root@liruilongs.github.io]-[~]

└─$ docker history busybox:latest

IMAGE CREATED CREATED BY SIZE COMMENT

16ea53ea7c65 2 weeks ago /bin/sh -c #(nop) CMD ["sh"] 0B

2 weeks ago /bin/sh -c #(nop) ADD file:c9e0c3d3badfd458c… 1.24MB

┌──[root@liruilongs.github.io]-[~]

└─$ docker run -it --rm --name=c1 busybox

WARNING: IPv4 forwarding is disabled. Networking will not work.

/ # ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:AC:11:00:02

inet addr:172.17.0.2 Bcast:172.17.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:8 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:648 (648.0 B) TX bytes:0 (0.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

/ # exit

┌──[root@liruilongs.github.io]-[~]

└─$ docker run -it --rm --name=c2 --network=mynet busybox

WARNING: IPv4 forwarding is disabled. Networking will not work.

/ # ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:0A:00:00:02

inet addr:10.0.0.2 Bcast:10.0.0.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:13 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:1086 (1.0 KiB) TX bytes:0 (0.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

/ # exit

┌──[root@liruilongs.github.io]-[~]

└─$

```

**Configure IP forwarding**

```bash

┌──[root@liruilongs.github.io]-[~]

└─$ cat /proc/sys/net/ipv4/ip_forward

0

┌──[root@liruilongs.github.io]-[~]

└─$ cat /etc/sysctl.conf

# sysctl settings are defined through files in

# /usr/lib/sysctl.d/, /run/sysctl.d/, and /etc/sysctl.d/.

#

# Vendors settings live in /usr/lib/sysctl.d/.

# To override a whole file, create a new file with the same in

# /etc/sysctl.d/ and put new settings there. To override

# only specific settings, add a file with a lexically later

# name in /etc/sysctl.d/ and put new settings there.

#

# For more information, see sysctl.conf(5) and sysctl.d(5).

┌──[root@liruilongs.github.io]-[~]

└─$ echo "net.ipv4.ip_forward = 1" >> /etc/sysctl.conf;sysctl -p

net.ipv4.ip_forward = 1

┌──[root@liruilongs.github.io]-[~]

└─$ docker run -it --rm --name=c2 --network=mynet busybox

/ # ping www.baidu.com

PING www.baidu.com (220.181.38.150): 56 data bytes

64 bytes from 220.181.38.150: seq=0 ttl=127 time=34.047 ms

64 bytes from 220.181.38.150: seq=1 ttl=127 time=20.363 ms

64 bytes from 220.181.38.150: seq=2 ttl=127 time=112.075 ms

^C

--- www.baidu.com ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 20.363/55.495/112.075 ms

/ # exit

┌──[root@liruilongs.github.io]-[~]

└─$ cat /proc/sys/net/ipv4/ip_forward

1

┌──[root@liruilongs.github.io]-[~]

└─$

```
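The session above appends to `/etc/sysctl.conf` with `>>`, which adds a duplicate line each time it is repeated. A minimal sketch of an idempotent variant, plus a check of the current kernel setting, follows; `forwarding_enabled` and `ensure_line` are hypothetical helper names.

```bash
#!/usr/bin/env bash
# forwarding_enabled: succeed if the given flag file contains "1".
# Defaults to the kernel's IPv4 forwarding switch. Hypothetical helper.
forwarding_enabled() {
    local flag_file="${1:-/proc/sys/net/ipv4/ip_forward}"
    [ "$(cat "$flag_file" 2>/dev/null)" = "1" ]
}

# ensure_line: append LINE to FILE only if that exact line is not there yet.
ensure_line() {
    local line="$1" file="$2"
    grep -qxF "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

if forwarding_enabled; then
    echo "IPv4 forwarding is on"
else
    echo "IPv4 forwarding is off (or the flag file is unreadable)"
fi

# To enable it persistently (as root), one could run:
#   ensure_line "net.ipv4.ip_forward = 1" /etc/sysctl.conf && sysctl -p
```

Only the read-only check runs when the block is executed; the persistent change is left as a comment because it needs root.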

**Build a WordPress blog with containers**

```bash

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker ps | grep -v IMAGE | awk '{print $1}'| xargs docker rm -f

1ce97e8dc071

0d435b696a7e

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker run -dit --name=db --restart=always -v $PWD/db:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=liruilong -e WORDPRESS_DATABASE=wordpress hub.c.163.com/library/mysql

8605e77f8d50223f52619e6e349085566bc53a7e74470ac0a44340620f32abe8

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

8605e77f8d50 hub.c.163.com/library/mysql "docker-entrypoint.s…" 6 seconds ago Up 4 seconds 3306/tcp db

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker run -itd --name=blog --restart=always -v $PWD/blog:/var/www/html -p 80 -e WORDPRESS_DB_HOST=172.17.0.2 -e WORDPRESS_DB_USER=root -e WORDPRESS_DB_PASSWORD=liruilong -e WORDPRESS_DB_NAME=wordpress hub.c.163.com/library/wordpress

a90951cdac418db85e9dfd0e0890ec1590765c5770faf9893927a96ea93da9f5

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

a90951cdac41 hub.c.163.com/library/wordpress "docker-entrypoint.s…" 3 seconds ago Up 2 seconds 0.0.0.0:49271->80/tcp, :::49271->80/tcp blog

8605e77f8d50 hub.c.163.com/library/mysql "docker-entrypoint.s…" 2 minutes ago Up 2 minutes 3306/tcp db

┌──[root@liruilongs.github.io]-[~/docker]

└─$

┌──[root@liruilongs.github.io]-[~/docker]

└─$

```

**Container network configuration**

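|Mode|Name|Description|
|--|--|--|
|bridge|Bridged mode|Containers share one subnet, as if connected through a switch; the host bridges them through the docker0 interface. This is the default mode.|
|host|Host mode|Shares the host's network namespace|
|none|Isolated mode|Isolated from the host; the container has its own separate network with only a loopback interface|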

**docker network list**

```bash

┌──[root@liruilongs.github.io]-[~]

└─$ docker network list

NETWORK ID NAME DRIVER SCOPE

ebc5c96c853a bridge bridge local

25037835956b host host local

ba07e9427974 none null local

```

**bridge: bridged mode**

```bash

┌──[root@liruilongs.github.io]-[~]

└─$ docker run -it --rm --name c1 centos /bin/bash

[root@62043df180e4 /]# ifconfig

bash: ifconfig: command not found

[root@62043df180e4 /]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

17: eth0@if18: mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:04 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.4/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

[root@62043df180e4 /]# exit

exit

```

**host: shares the host's network namespace**

```bash

┌──[root@liruilongs.github.io]-[~]

└─$ docker run -it --rm --name c1 --network host centos /bin/bash

[root@liruilongs /]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens32: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:c9:6f:ae brd ff:ff:ff:ff:ff:ff

inet 192.168.26.55/24 brd 192.168.26.255 scope global ens32

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fec9:6fae/64 scope link

valid_lft forever preferred_lft forever

3: br-4b3da203747c: mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:8e:25:1b:19 brd ff:ff:ff:ff:ff:ff

inet 10.0.0.1/24 brd 10.0.0.255 scope global br-4b3da203747c

valid_lft forever preferred_lft forever

4: docker0: mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:0a:63:cf:de brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:aff:fe63:cfde/64 scope link

valid_lft forever preferred_lft forever

14: veth9f0ef36@if13: mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether 16:2f:a6:23:3b:88 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::142f:a6ff:fe23:3b88/64 scope link

valid_lft forever preferred_lft forever

16: veth37a0e67@if15: mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether 56:b4:1b:74:cf:3f brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::54b4:1bff:fe74:cf3f/64 scope link

valid_lft forever preferred_lft forever

[root@liruilongs /]# exit

exit

```

**none: isolated from the host, with its own separate network**

```bash

┌──[root@liruilongs.github.io]-[~]

└─$ docker run -it --rm --name c1 --network none centos /bin/bash

[root@7f955d36625e /]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

[root@7f955d36625e /]# exit

exit

┌──[root@liruilongs.github.io]-[~]

└─$

```

### Linking containers

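|Command|Description|
|--|--|
|`docker run -it --rm --name=h1 centos /bin/bash`|Create a container named h1|
|`docker inspect h1 \| grep -i ipaddr`|Look up h1's IP address|
|`docker run -it --rm --name=h2 centos ping 172.17.0.4`|Option 1: create a second container h2 and reach h1 by IP address|
|`docker run -it --rm --name=h2 --link h1:h1 centos ping h1`|Option 2: create h2 and reach h1 by name through `--link`|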

```bash

┌──[root@liruilongs.github.io]-[~]

└─$ docker run -it --rm --name=h1 centos /bin/bash

[root@207dbbda59af /]#

```

```bash

┌──[root@liruilongs.github.io]-[~]

└─$ docker inspect h1 | grep -i ipaddr

"SecondaryIPAddresses": null,

"IPAddress": "172.17.0.4",

"IPAddress": "172.17.0.4",

┌──[root@liruilongs.github.io]-[~]

└─$ docker run -it --rm --name=h2 centos ping -c 3 172.17.0.4

PING 172.17.0.4 (172.17.0.4) 56(84) bytes of data.

64 bytes from 172.17.0.4: icmp_seq=1 ttl=64 time=0.284 ms

64 bytes from 172.17.0.4: icmp_seq=2 ttl=64 time=0.098 ms

64 bytes from 172.17.0.4: icmp_seq=3 ttl=64 time=0.142 ms

--- 172.17.0.4 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2003ms

rtt min/avg/max/mdev = 0.098/0.174/0.284/0.080 ms

┌──[root@liruilongs.github.io]-[~]

└─$ docker run -it --rm --name=h2 --link h1:h1 centos ping -c 3 h1

PING h1 (172.17.0.4) 56(84) bytes of data.

64 bytes from h1 (172.17.0.4): icmp_seq=1 ttl=64 time=0.124 ms

64 bytes from h1 (172.17.0.4): icmp_seq=2 ttl=64 time=0.089 ms

64 bytes from h1 (172.17.0.4): icmp_seq=3 ttl=64 time=0.082 ms

--- h1 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2002ms

rtt min/avg/max/mdev = 0.082/0.098/0.124/0.020 ms

┌──[root@liruilongs.github.io]-[~]

└─$ docker run -it --rm --name=h2 --link h1 centos ping -c 3 h1

PING h1 (172.17.0.4) 56(84) bytes of data.

64 bytes from h1 (172.17.0.4): icmp_seq=1 ttl=64 time=0.129 ms

64 bytes from h1 (172.17.0.4): icmp_seq=2 ttl=64 time=0.079 ms

64 bytes from h1 (172.17.0.4): icmp_seq=3 ttl=64 time=0.117 ms

--- h1 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 1999ms

rtt min/avg/max/mdev = 0.079/0.108/0.129/0.022 ms

┌──[root@liruilongs.github.io]-[~]

└─$

```
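The `grep -i ipaddr` output above still has to be read by eye. As a small sketch, the address can be pulled out of that text with `sed`; the function name `container_ip` is made up for illustration.

```bash
#!/usr/bin/env bash
# container_ip (hypothetical helper): read `docker inspect` output on stdin
# and print the first non-empty "IPAddress" value.
container_ip() {
  sed -n 's/.*"IPAddress": *"\([0-9.][0-9.]*\)".*/\1/p' | head -n 1
}
```

Typical use would be `docker inspect h1 | container_ip`. Docker can also print the value directly with `docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' h1`. Note that `--link` is a legacy feature; containers on a user-defined network (such as the `mynet` network used earlier) can resolve each other by name without it.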

**Building a WordPress blog with containers: the simpler way**

```bash

┌──[root@liruilongs.github.io]-[~]

└─$ docker run -dit --name=db --restart=always -v $PWD/db:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=liruilong -e WORDPRESS_DATABASE=wordpress hub.c.163.com/library/mysql

c4a88590cb21977fc68022501fde1912d0bb248dcccc970ad839d17420b8b08d

┌──[root@liruilongs.github.io]-[~]

└─$ docker run -dit --name blog --link=db:mysql -p 80:80 hub.c.163.com/library/wordpress

8a91caa1f9fef1575cc38788b0e8739b7260729193cf18b094509dcd661f544b

┌──[root@liruilongs.github.io]-[~]

└─$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

8a91caa1f9fe hub.c.163.com/library/wordpress "docker-entrypoint.s…" 6 seconds ago Up 4 seconds 0.0.0.0:80->80/tcp, :::80->80/tcp blog

c4a88590cb21 hub.c.163.com/library/mysql "docker-entrypoint.s…" About a minute ago Up About a minute 3306/tcp db

┌──[root@liruilongs.github.io]-[~]

```

**This uses container linking with the alias `mysql`, which the WordPress image looks for by default; see the image description.**

```bash

┌──[root@liruilongs.github.io]-[~]

└─$ docker exec -it db env

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

HOSTNAME=c4a88590cb21

TERM=xterm

MYSQL_ROOT_PASSWORD=liruilong

WORDPRESS_DATABASE=wordpress

GOSU_VERSION=1.7

MYSQL_MAJOR=5.7

MYSQL_VERSION=5.7.18-1debian8

HOME=/root

┌──[root@liruilongs.github.io]-[~]

└─$ docker exec -it blog env

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

HOSTNAME=8a91caa1f9fe

TERM=xterm

MYSQL_PORT=tcp://172.17.0.2:3306

MYSQL_PORT_3306_TCP=tcp://172.17.0.2:3306

MYSQL_PORT_3306_TCP_ADDR=172.17.0.2

MYSQL_PORT_3306_TCP_PORT=3306

MYSQL_PORT_3306_TCP_PROTO=tcp

MYSQL_NAME=/blog/mysql

MYSQL_ENV_MYSQL_ROOT_PASSWORD=liruilong

MYSQL_ENV_WORDPRESS_DATABASE=wordpress

MYSQL_ENV_GOSU_VERSION=1.7

MYSQL_ENV_MYSQL_MAJOR=5.7

MYSQL_ENV_MYSQL_VERSION=5.7.18-1debian8

PHPIZE_DEPS=autoconf dpkg-dev file g++ gcc libc-dev libpcre3-dev make pkg-config re2c

PHP_INI_DIR=/usr/local/etc/php

APACHE_CONFDIR=/etc/apache2

APACHE_ENVVARS=/etc/apache2/envvars

PHP_EXTRA_BUILD_DEPS=apache2-dev

PHP_EXTRA_CONFIGURE_ARGS=--with-apxs2

PHP_CFLAGS=-fstack-protector-strong -fpic -fpie -O2

PHP_CPPFLAGS=-fstack-protector-strong -fpic -fpie -O2

PHP_LDFLAGS=-Wl,-O1 -Wl,--hash-style=both -pie

GPG_KEYS=0BD78B5F97500D450838F95DFE857D9A90D90EC1 6E4F6AB321FDC07F2C332E3AC2BF0BC433CFC8B3

PHP_VERSION=5.6.31

PHP_URL=https://secure.php.net/get/php-5.6.31.tar.xz/from/this/mirror

PHP_ASC_URL=https://secure.php.net/get/php-5.6.31.tar.xz.asc/from/this/mirror

PHP_SHA256=c464af61240a9b7729fabe0314cdbdd5a000a4f0c9bd201f89f8628732fe4ae4

PHP_MD5=

WORDPRESS_VERSION=4.8.1

WORDPRESS_SHA1=5376cf41403ae26d51ca55c32666ef68b10e35a4

HOME=/root

┌──[root@liruilongs.github.io]-[~]

└─$

```
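The `MYSQL_*` variables in the `blog` container follow a fixed naming pattern derived from the link alias and the exposed port. The sketch below reproduces the connection variables for one port; `link_env` is a hypothetical helper written only to show the pattern, and it leaves out the `<ALIAS>_NAME` and `<ALIAS>_ENV_*` variables.

```bash
#!/usr/bin/env bash
# link_env NAME IP PORT PROTO  (hypothetical helper)
# Print the connection variables that a legacy --link with alias NAME
# injects into the consuming container for one exposed port.
link_env() {
  local name=$1 ip=$2 port=$3 proto=$4 prefix proto_uc
  prefix=$(printf '%s' "$name" | tr '[:lower:]' '[:upper:]')
  proto_uc=$(printf '%s' "$proto" | tr '[:lower:]' '[:upper:]')
  printf '%s_PORT=%s://%s:%s\n' "$prefix" "$proto" "$ip" "$port"
  printf '%s_PORT_%s_%s=%s://%s:%s\n' "$prefix" "$port" "$proto_uc" "$proto" "$ip" "$port"
  printf '%s_PORT_%s_%s_ADDR=%s\n' "$prefix" "$port" "$proto_uc" "$ip"
  printf '%s_PORT_%s_%s_PORT=%s\n' "$prefix" "$port" "$proto_uc" "$port"
  printf '%s_PORT_%s_%s_PROTO=%s\n' "$prefix" "$port" "$proto_uc" "$proto"
}
```

Calling `link_env mysql 172.17.0.2 3306 tcp` prints the same five `MYSQL_PORT...` lines that appear in the `docker exec -it blog env` output above.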

## 7. Custom images

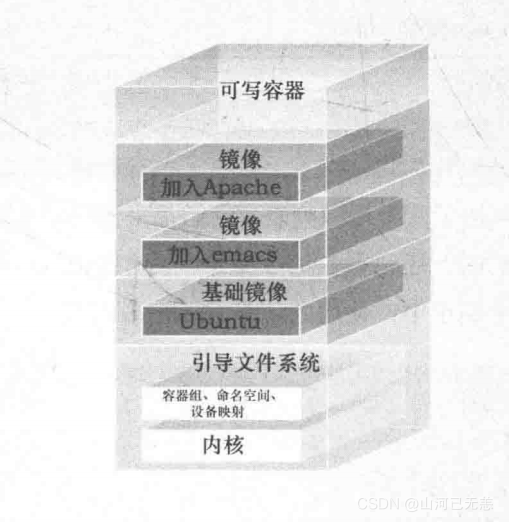

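A `Docker` image is made up of filesystems layered on top of one another. At the bottom is a boot filesystem, `bootfs`, which `Docker` users will almost never interact with. In fact, once a container has booted, it is moved into memory and the boot filesystem is unmounted to free the memory used by the `initrd` disk image.

So far this looks much like a typical Linux virtualization stack. In fact, the second layer of a Docker image is the root filesystem, `rootfs`, which sits on top of the boot filesystem.

The `rootfs` can be one of several operating system filesystems (for example a `Debian` or `Ubuntu` filesystem). In a traditional Linux boot, the root filesystem is first mounted read-only and is switched to read-write only after boot has finished and an integrity check has passed. In `Docker`, however, the root filesystem always stays read-only, and `Docker` uses a union mount to add more read-only filesystems on top of the root filesystem layer.

A union mount mounts several filesystems at the same time but presents them as a single filesystem; it overlays the layers on top of one another.

`Docker` calls each of these filesystems an image. One image can be stacked on top of another. The image underneath is called the `parent image`, and so on down to the bottom of the stack, where the last image is called the `base image`. Finally, when a container is started from an image, `Docker` mounts a read-write filesystem on top of that image. The programs we want to run in Docker execute in this read-write layer.

When `Docker` first starts a container, the initial read-write layer is empty. Any change to the filesystem is applied to this layer. For example, to modify a file:

+ The file is first copied from the read-only layer below into the read-write layer. The read-only version still exists, but it is hidden by the copy in the read-write layer. This mechanism is usually called `copy on write`, and it is one of the things that makes Docker so powerful.

+ Every image layer is read-only and never changes afterwards. When a new container is created, `Docker` builds an image stack and adds a read-write layer on top of it. This read-write layer, together with the image layers beneath it and some configuration data, makes up the container.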

***

|Command|

|--|

|docker build -t v4 . -f filename|

|docker build -t name .|

**What CMD does**

```bash

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker run -it --rm --name c1 centos_ip_2

[root@4683bca411ec /]# exit

exit

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker run -it --rm --name c1 centos_ip_2 /bin/bash

[root@08e12bb46bcd /]# exit

exit

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker run -it --rm --name c1 centos_ip_2 echo liruilong

liruilong

```

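**The fewer layers, the smaller the image. Each RUN instruction creates one layer, so combine commands into a single RUN where possible.**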

```

┌──[root@liruilongs.github.io]-[~/docker]

└─$ cat Dockerfile

FROM hub.c.163.com/library/centos

MAINTAINER liruilong

RUN yum -y install net-tools && \

yum -y install iproute -y

CMD ["/bin/bash"]

┌──[root@liruilongs.github.io]-[~/docker]

└─$

```
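Since each `RUN` instruction adds a layer, a quick count of `RUN` lines shows how many layers a Dockerfile will add this way. A minimal sketch, with `count_run_layers` as a hypothetical helper name:

```bash
#!/usr/bin/env bash
# count_run_layers DOCKERFILE  (hypothetical helper)
# Count RUN instructions; continuation lines after "\" belong to the same RUN.
count_run_layers() {
  grep -c -E '^[[:space:]]*RUN[[:space:]]' "$1"
}
```

For an image that has already been built, `docker history <image>` lists the actual layers and their sizes.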

**When using yum in a RUN instruction, finish with `yum clean all` to clear the cache**

```bash

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker images | grep centos_

centos_ip_3 latest 93e0d06f7dd5 3 minutes ago 216MB

centos_ip_2 latest 8eea343337d7 6 minutes ago 330MB

┌──[root@liruilongs.github.io]-[~/docker]

└─$ cat Dockerfile

FROM hub.c.163.com/library/centos

MAINTAINER liruilong

RUN yum -y install net-tools && \

yum -y install iproute -y && \

yum clean all

CMD ["/bin/bash"]

┌──[root@liruilongs.github.io]-[~/docker]

└─$

```

**COPY and ADD both copy files into the image; ADD also extracts local tar archives automatically, COPY does not**

**Building an Nginx image**

```bash

FROM centos

MAINTAINER liruilong

RUN yum -y install nginx && \

yum clean all

EXPOSE 80

CMD ["nginx", "-g","daemon off;"]

```

Building an image with SSH enabled

```bash

```

## 8. Setting up a local Docker registry

|Setting up a local Docker registry|

|--|

|docker pull registry|

|docker run -d --name registry -p 5000:5000 --restart=always -v /myreg:/var/lib/registry registry|

**Install the registry image**

```bash

┌──[root@vms56.liruilongs.github.io]-[~]

└─#yum -y install docker-ce

Loaded plugins: fastestmirror

kubernetes/signature | 844 B 00:00:00

Retrieving key from https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

Importing GPG key 0x307EA071:

Userid : "Rapture Automatic Signing Key (cloud-rapture-signing-key-2021-03-01-08_01_09.pub)"

Fingerprint: 7f92 e05b 3109 3bef 5a3c 2d38 feea 9169 307e a071

From : https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

Retrieving key from https://mirrors.aliyun.com/kubernetes/yum/doc/

.................

Complete!

┌──[root@vms56.liruilongs.github.io]-[~]

└─#sudo tee /etc/docker/daemon.json <<-'EOF'

> {

> "registry-mirrors": ["https://2tefyfv7.mirror.aliyuncs.com"]

> }

> EOF

{

"registry-mirrors": ["https://2tefyfv7.mirror.aliyuncs.com"]

}

┌──[root@vms56.liruilongs.github.io]-[~]

└─#sudo systemctl daemon-reload

┌──[root@vms56.liruilongs.github.io]-[~]

└─#sudo systemctl restart docker

┌──[root@vms56.liruilongs.github.io]-[~]

└─#docker pull hub.c.163.com/library/registry:latest

latest: Pulling from library/registry

25728a036091: Pull complete

0da5d1919042: Pull complete

e27a85fd6357: Pull complete

d9253dc430fe: Pull complete

916886b856db: Pull complete

Digest: sha256:fce8e7e1569d2f9193f75e9b42efb07a7557fc1e9d2c7154b23da591e324f3d1

Status: Downloaded newer image for hub.c.163.com/library/registry:latest

hub.c.163.com/library/registry:latest

┌──[root@vms56.liruilongs.github.io]-[~]

└─#docker history hub.c.163.com/library/registry:latest

IMAGE CREATED CREATED BY SIZE COMMENT

751f286bc25e 4 years ago /bin/sh -c #(nop) CMD ["/etc/docker/registr… 0B

4 years ago /bin/sh -c #(nop) ENTRYPOINT ["/entrypoint.… 0B

4 years ago /bin/sh -c #(nop) COPY file:7b57f7ab1a8cf85c… 155B

4 years ago /bin/sh -c #(nop) EXPOSE 5000/tcp 0B

4 years ago /bin/sh -c #(nop) VOLUME [/var/lib/registry] 0B

4 years ago /bin/sh -c #(nop) COPY file:6c4758d509045dc4… 295B

4 years ago /bin/sh -c #(nop) COPY file:b99d4fe47ad1addf… 22.8MB

4 years ago /bin/sh -c set -ex && apk add --no-cache… 5.61MB

4 years ago /bin/sh -c #(nop) CMD ["/bin/sh"] 0B

4 years ago /bin/sh -c #(nop) ADD file:89e72bfc19e81624b… 4.81MB

┌──[root@vms56.liruilongs.github.io]-[~]

└─#docker run -dit --name=myreg -p 5000:5000 -v $PWD/myreg:/var/lib/registry hub.c.163.com/library/registry

317bcc7bd882fd0d29cf9a2898e5cec4378431f029a796b9f9f643762679a14d

┌──[root@vms56.liruilongs.github.io]-[~]

└─#docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS

NAMES

317bcc7bd882 hub.c.163.com/library/registry "/entrypoint.sh /etc…" 5 seconds ago Up 3 seconds 0.0.0.0:5000->5000/tcp, :::5000->5000/tcp myreg

└─#

└─#

```

**SELinux and firewall settings**

```bash

┌──[root@vms56.liruilongs.github.io]-[~]

└─#getenforce

Disabled

┌──[root@vms56.liruilongs.github.io]-[~]

└─#systemctl status firewalld.service

● firewalld.service - firewalld - dynamic firewall daemon

Loaded: loaded (/usr/lib/systemd/system/firewalld.service; enabled; vendor preset: enabled)

Active: active (running) since Wed 2021-10-06 12:57:44 CST; 15min ago

Docs: man:firewalld(1)

Main PID: 608 (firewalld)

Memory: 1.7M

CGroup: /system.slice/firewalld.service

└─608 /usr/bin/python -Es /usr/sbin/firewalld --nofork --nopid

Oct 06 13:05:18 vms56.liruilongs.github.io firewalld[608]: WARNING: COMMAND_FAILED: '/usr/sbin/iptables -w2 -t nat -C PREROUTING -m addrtype --dst-type LOCAL -j DOCKER' fa...that name.

Oct 06 13:05:18 vms56.liruilongs.github.io firewalld[608]: WARNING: COMMAND_FAILED: '/usr/sbin/iptables -w2 -t nat -C OUTPUT -m addrtype --dst-type LOCAL -j DOCKER ! --dst...that name.

Oct 06 13:05:18 vms56.liruilongs.github.io firewalld[608]: WARNING: COMMAND_FAILED: '/usr/sbin/iptables -w2 -t filter -C FORWARD -o docker0 -j DOCKER' failed: iptables: No...that name.

Oct 06 13:05:18 vms56.liruilongs.github.io firewalld[608]: WARNING: COMMAND_FAILED: '/usr/sbin/iptables -w2 -t filter -C FORWARD -o docker0 -m conntrack --ctstate RELATED,...t chain?).

Oct 06 13:05:18 vms56.liruilongs.github.io firewalld[608]: WARNING: COMMAND_FAILED: '/usr/sbin/iptables -w2 -t filter -C FORWARD -j DOCKER-ISOLATION-STAGE-1' failed: iptab...that name.

Oct 06 13:05:18 vms56.liruilongs.github.io firewalld[608]: WARNING: COMMAND_FAILED: '/usr/sbin/iptables -w2 -t filter -C DOCKER-ISOLATION-STAGE-1 -i docker0 ! -o docker0 -...that name.

Oct 06 13:05:18 vms56.liruilongs.github.io firewalld[608]: WARNING: COMMAND_FAILED: '/usr/sbin/iptables -w2 -t filter -C DOCKER-ISOLATION-STAGE-2 -o docker0 -j DROP' faile...t chain?).

Oct 06 13:08:01 vms56.liruilongs.github.io firewalld[608]: WARNING: COMMAND_FAILED: '/usr/sbin/iptables -w2 -t nat -C DOCKER -p tcp -d 0/0 --dport 5000 -j DNAT --to-destin...that name.

Oct 06 13:08:01 vms56.liruilongs.github.io firewalld[608]: WARNING: COMMAND_FAILED: '/usr/sbin/iptables -w2 -t filter -C DOCKER ! -i docker0 -o docker0 -p tcp -d 172.17.0....t chain?).

Oct 06 13:08:01 vms56.liruilongs.github.io firewalld[608]: WARNING: COMMAND_FAILED: '/usr/sbin/iptables -w2 -t nat -C POSTROUTING -p tcp -s 172.17.0.2 -d 172.17.0.2 --dpor...that name.

Hint: Some lines were ellipsized, use -l to show in full.

┌──[root@vms56.liruilongs.github.io]-[~]

└─#systemctl disable firewalld.service --now

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

┌──[root@vms56.liruilongs.github.io]-[~]

└─#

```

**Allowing image pushes over HTTP (insecure registry)**

```bash

┌──[root@liruilongs.github.io]-[~]

└─$ cat /etc/docker/daemon.json

{

"registry-mirrors": ["https://2tefyfv7.mirror.aliyuncs.com"]

}

┌──[root@liruilongs.github.io]-[~]

└─$ vim /etc/docker/daemon.json

┌──[root@liruilongs.github.io]-[~]

└─$ cat /etc/docker/daemon.json

{

"registry-mirrors": ["https://2tefyfv7.mirror.aliyuncs.com"],

"insecure-registries": ["192.168.26.56:5000"]

}

┌──[root@liruilongs.github.io]-[~]

└─$

┌──[root@liruilongs.github.io]-[~]

└─$ systemctl restart docker

┌──[root@liruilongs.github.io]-[~]

```
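With `insecure-registries` in place, an image still has to be tagged with the registry address before it can be pushed. The sketch below only prints the two commands instead of running them; the helper names `registry_ref` and `push_plan` are hypothetical, and the `db/mysql:v1` name is taken from the registry listing in the next section.

```bash
#!/usr/bin/env bash
# registry_ref REGISTRY REPO [TAG]  (hypothetical helper)
# Build the full image reference; TAG defaults to "latest".
registry_ref() {
  local registry=$1 repo=$2 tag=${3:-latest}
  printf '%s/%s:%s\n' "$registry" "$repo" "$tag"
}

# push_plan LOCAL_IMAGE REGISTRY REPO [TAG]  (hypothetical helper)
# Print the tag and push commands without executing them.
push_plan() {
  local image=$1 ref
  ref=$(registry_ref "$2" "$3" "${4:-latest}")
  printf 'docker tag %s %s\n' "$image" "$ref"
  printf 'docker push %s\n' "$ref"
}
```

For example, `push_plan hub.c.163.com/library/mysql 192.168.26.56:5000 db/mysql v1` prints a `docker tag` line and a `docker push` line; piping the output to `sh` would run them.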

**Using the registry API, and a script that lists the images**

```bash

┌──[root@liruilongs.github.io]-[~/docker]

└─$ vim dockerimages.sh

┌──[root@liruilongs.github.io]-[~/docker]

└─$ sh dockerimages.sh 192.168.26.56

192.168.26.56:5000/db/mysql:v1

192.168.26.56:5000/os/centos:latest

┌──[root@liruilongs.github.io]-[~/docker]

└─$ curl http://192.168.26.56:5000/v2/_catalog

{"repositories":["db/mysql","os/centos"]}

┌──[root@liruilongs.github.io]-[~/docker]

└─$ curl -XGET http://192.168.26.56:5000/v2/_catalog

{"repositories":["db/mysql","os/centos"]}

┌──[root@liruilongs.github.io]-[~/docker]

└─$ curl -XGET http://192.168.26.56:5000/v2/os/centos/tags/list

{"name":"os/centos","tags":["latest"]}

┌──[root@liruilongs.github.io]-[~/docker]

└─$

```

```bash

┌──[root@liruilongs.github.io]-[~/docker]

└─$ cat dockerimages.sh

#!/bin/bash
# List every image:tag stored in a private registry listening on port 5000.
# Usage: sh dockerimages.sh <registry-host>
registry="$1:5000"
# /v2/_catalog returns {"repositories":[...]}
for repo in $(curl -s "$registry/v2/_catalog" | jq -r '.repositories[]'); do
  # /v2/<repo>/tags/list returns {"name":...,"tags":[...]}
  for tag in $(curl -s "$registry/v2/$repo/tags/list" | jq -r '.tags[]?'); do
    echo "$registry/$repo:$tag"
  done
done

┌──[root@liruilongs.github.io]-[~/docker]

└─$ yum -y install jq

```

**Deleting images from the local registry**

```bash

curl https://raw.githubusercontent.com/burnettk/delete-docker-registry-image/master/delete_docker_registry_image.py | sudo tee /usr/local/bin/delete_docker_registry_image >/dev/null

sudo chmod a+x /usr/local/bin/delete_docker_registry_image

```

```bash

export REGISTRY_DATA_DIR=/opt/data/registry/docker/registry/v2

```

```bash

delete_docker_registry_image --image testrepo/awesomeimage --dry-run

delete_docker_registry_image --image testrepo/awesomeimage

delete_docker_registry_image --image testrepo/awesomeimage:supertag

```

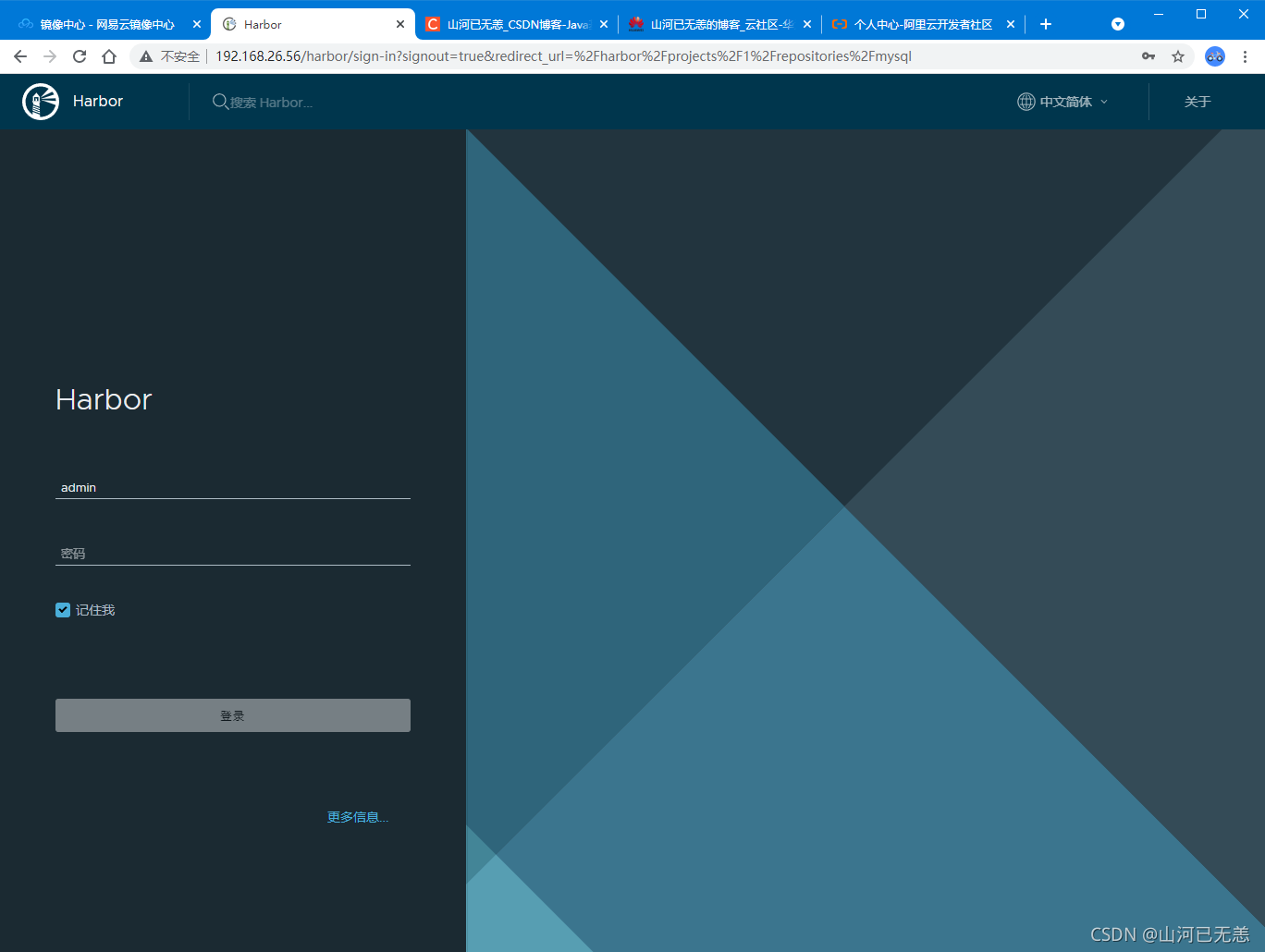

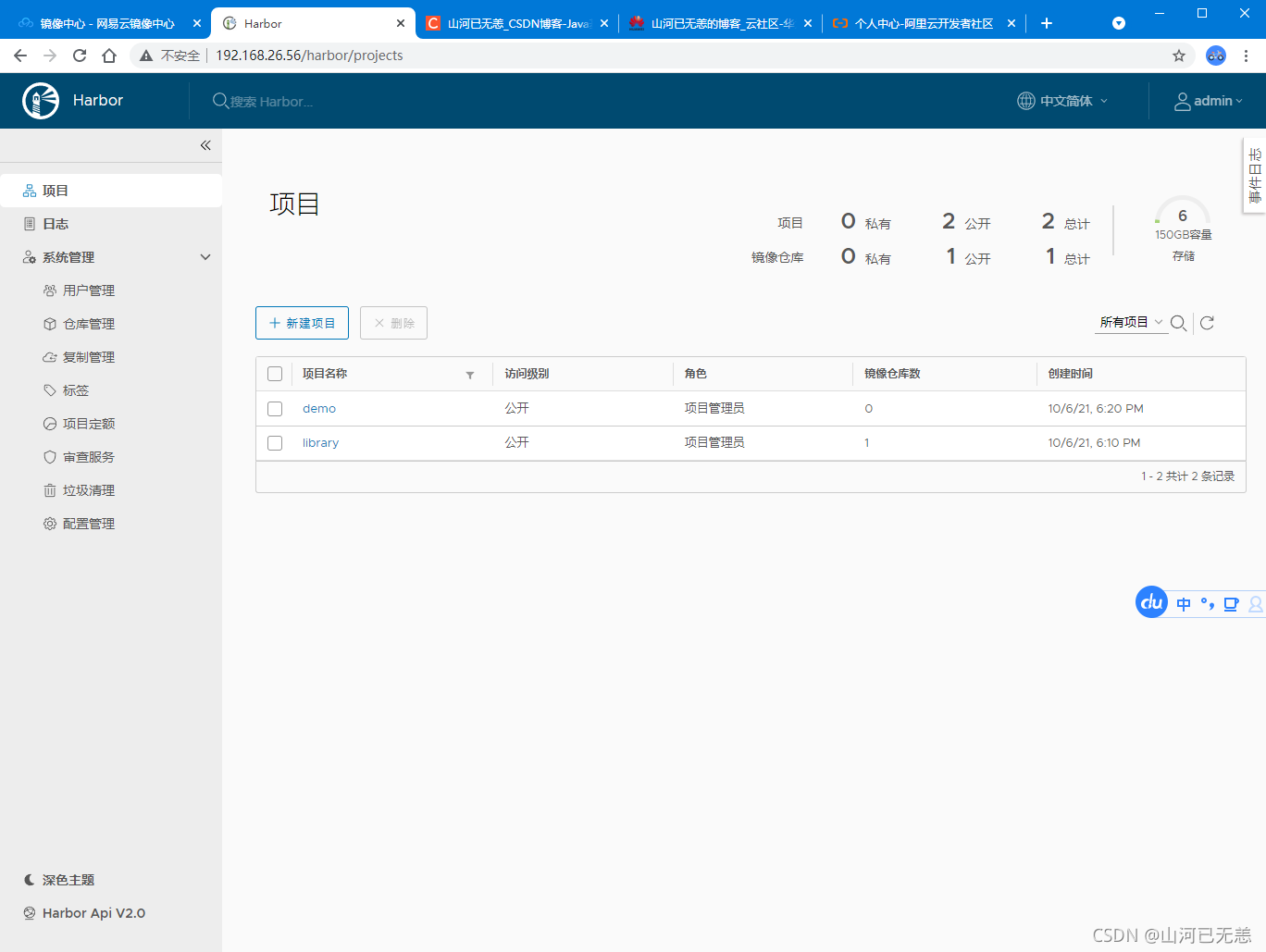

## 9. Using Harbor

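|Using Harbor|
|--|
|Install and start Docker, then install docker-compose|
|Upload the Harbor offline installer package|
|Load the Harbor images|
|Edit harbor.yml|
|Set hostname to your own host name; if you are not using certificates, comment out the https section|
|harbor_admin_password is the login password|
|Install compose|
|Run the script ./install.sh|
|Open the IP address in a browser|
|docker login IP (this creates a .docker directory in the home directory)|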

```bash

┌──[root@vms56.liruilongs.github.io]-[~]

└─#yum install -y docker-compose

┌──[root@vms56.liruilongs.github.io]-[/]

└─#ls

bin dev harbor-offline-installer-v2.0.6.tgz lib machine-id mnt proc run srv tmp var

boot etc home lib64 media opt root sbin sys usr

┌──[root@vms56.liruilongs.github.io]-[/]

└─#tar zxvf harbor-offline-installer-v2.0.6.tgz

harbor/harbor.v2.0.6.tar.gz

harbor/prepare

harbor/LICENSE

harbor/install.sh

harbor/common.sh

harbor/harbor.yml.tmpl

┌──[root@vms56.liruilongs.github.io]-[/]

└─#docker load -i harbor/harbor.v2.0.6.tar.gz

```

**Edit the configuration file**

```bash

┌──[root@vms56.liruilongs.github.io]-[/]

└─#cd harbor/

┌──[root@vms56.liruilongs.github.io]-[/harbor]

└─#ls

common.sh harbor.v2.0.6.tar.gz harbor.yml.tmpl install.sh LICENSE prepare

┌──[root@vms56.liruilongs.github.io]-[/harbor]

└─#cp harbor.yml.tmpl harbor.yml

┌──[root@vms56.liruilongs.github.io]-[/harbor]

└─#ls

common.sh harbor.v2.0.6.tar.gz harbor.yml harbor.yml.tmpl install.sh LICENSE prepare

┌──[root@vms56.liruilongs.github.io]-[/harbor]

└─#vim harbor.yml

┌──[root@vms56.liruilongs.github.io]-[/harbor]

└─#

```

**harbor.yml**

```bash

# DO NOT use localhost or 127.0.0.1, because Harbor needs to be accessed by external clients.

hostname: 192.168.26.56

# http related config

.......

# https related config

#https:

# https port for harbor, default is 443

# port: 443

# The path of cert and key files for nginx

# certificate: /your/certificate/path

# private_key: /your/private/key/path

....

# Remember Change the admin password from UI after launching Harbor.

harbor_admin_password: Harbor12345

# Harbor DB configuration

```
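The same two edits (set `hostname`, comment out the `https` block) can be scripted instead of made in `vim`. This is a rough sketch under assumptions: `configure_harbor` is a hypothetical helper, and it assumes the template keeps `hostname:` and `https:` as top-level keys with the `https` settings indented beneath, as in the excerpt above.

```bash
#!/usr/bin/env bash
# configure_harbor TEMPLATE OUTPUT HOST  (hypothetical helper)
# Copy TEMPLATE to OUTPUT, replacing the hostname and commenting out the
# top-level https block up to the next top-level key.
configure_harbor() {
  local tmpl=$1 out=$2 host=$3
  awk -v host="$host" '
    /^hostname:/ { print "hostname: " host; next }
    /^https:/    { in_https = 1 }                         # block starts here
    in_https && /^[^ \t#]/ && !/^https:/ { in_https = 0 } # next top-level key ends it
    in_https && NF && !/^#/ { print "#" $0; next }        # comment out block lines
    { print }
  ' "$tmpl" > "$out"
}
```

In this directory it would be run as `configure_harbor harbor.yml.tmpl harbor.yml 192.168.26.56`, after which `harbor.yml` should be reviewed by hand before running `./prepare`.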

**./prepare && ./install.sh**

```bash

┌──[root@vms56.liruilongs.github.io]-[/harbor]

└─#./prepare

prepare base dir is set to /harbor

WARNING:root:WARNING: HTTP protocol is insecure. Harbor will deprecate http protocol in the future. Please make sure to upgrade to https

Generated configuration file: /config/log/logrotate.conf

Generated configuration file: /config/log/rsyslog_docker.conf

Generated configuration file: /config/nginx/nginx.conf

Generated configuration file: /config/core/env

Generated configuration file: /config/core/app.conf

Generated configuration file: /config/registry/config.yml

Generated configuration file: /config/registryctl/env

Generated configuration file: /config/registryctl/config.yml

Generated configuration file: /config/db/env

Generated configuration file: /config/jobservice/env

Generated configuration file: /config/jobservice/config.yml

Generated and saved secret to file: /data/secret/keys/secretkey

Successfully called func: create_root_cert

Generated configuration file: /compose_location/docker-compose.yml

Clean up the input dir

┌──[root@vms56.liruilongs.github.io]-[/harbor]

└─#./install.sh

[Step 0]: checking if docker is installed ...

Note: docker version: 20.10.9

[Step 1]: checking docker-compose is installed ...

```

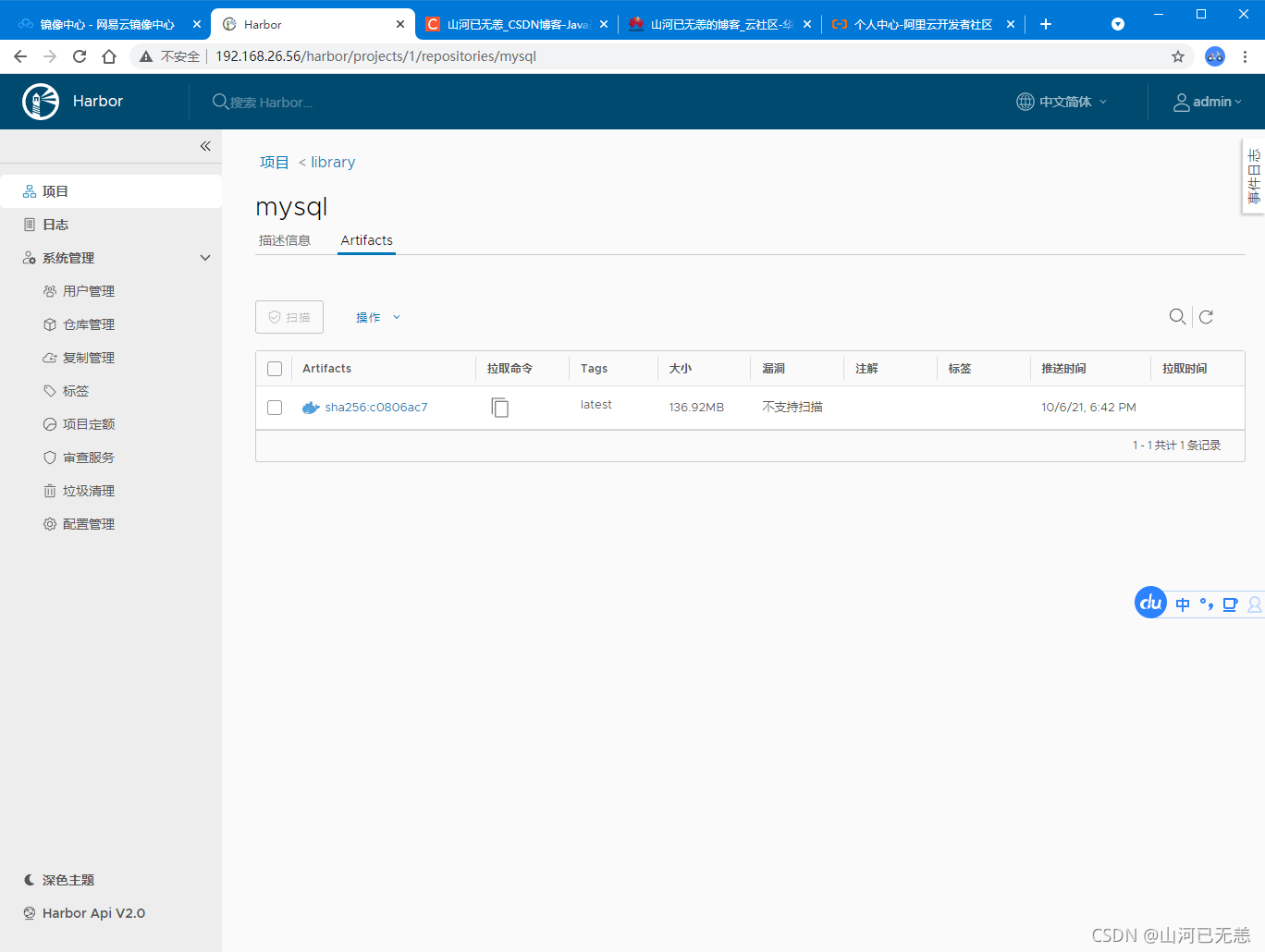

```bash

┌──[root@liruilongs.github.io]-[~/docker]

└─$ docker push 192.168.26.56/library/mysql

Using default tag: latest

The push refers to repository [192.168.26.56/library/mysql]

8129a85b4056: Pushed

3c376267ac82: Pushed

fa9efdcb088a: Pushed

9e615ff77b4f: Pushed

e5de8ba20fae: Pushed

2bee3420217b: Pushed

904af8e2b2d5: Pushed

daf31ec3573d: Pushed

da4155a7d640: Pushed

3b7c5f5acc82: Pushed

295d6a056bfd: Pushed

latest: digest: sha256:c0806ac73235043de2a6cb4738bb2f6a74f71d9c7aa0f19c8e7530fd6c299e75 size: 2617

┌──[root@liruilongs.github.io]-[~/docker]

└─$

```

## 10. Limiting container resources

|Limiting resources with cgroups|

|--|

|docker run -itd --name=c3 --cpuset-cpus 0 -m 200M centos|

|docker run -itd --name=c2 -m 200M centos|

**How cgroups are used**

+ **Memory limit**

```bash

/etc/systemd/system/memload.service.d

cat 00-aa.conf

[Service]

MemoryLimit=512M

```

+ **CPU affinity**

```bash

ps mo pid,comm,psr $(pgrep httpd)

/etc/systemd/system/httpd.service.d

cat 00-aa.conf

[Service]

CPUAffinity=0

```
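The two snippets above are systemd drop-in files: a `.conf` file inside `<unit>.d/` that overrides settings in the `[Service]` section. A minimal sketch that writes such a file; `write_dropin` is a hypothetical helper, and the first argument lets the target directory be something other than `/etc/systemd/system` for a trial run.

```bash
#!/usr/bin/env bash
# write_dropin ROOT UNIT NAME KEY VALUE  (hypothetical helper)
# Create ROOT/UNIT.d/NAME.conf with a [Service] section holding KEY=VALUE
# and print the path of the file that was written.
write_dropin() {
  local root=$1 unit=$2 name=$3 key=$4 value=$5
  local dir="$root/$unit.d"
  mkdir -p "$dir"
  printf '[Service]\n%s=%s\n' "$key" "$value" > "$dir/$name.conf"
  printf '%s\n' "$dir/$name.conf"
}
```

For example, `write_dropin /etc/systemd/system memload.service 00-aa MemoryLimit 512M`, followed by `systemctl daemon-reload` and a restart of the unit. `MemoryLimit=` is the cgroup v1 setting; on hosts using cgroup v2, systemd uses `MemoryMax=` instead.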

**How to limit a container**

```bash

┌──[root@liruilongs.github.io]-[/]

└─$ docker exec -it c1 bash

[root@55e45b34d93d /]# ls

bin etc lib lost+found mnt proc run srv tmp var

dev home lib64 media opt root sbin sys usr

[root@55e45b34d93d /]# cd opt/

[root@55e45b34d93d opt]# ls

memload-7.0-1.r29766.x86_64.rpm

[root@55e45b34d93d opt]# rpm -ivh memload-7.0-1.r29766.x86_64.rpm

Verifying... ################################# [100%]

Preparing... ################################# [100%]

Updating / installing...

1:memload-7.0-1.r29766 ################################# [100%]

[root@55e45b34d93d opt]# exit

exit

┌──[root@liruilongs.github.io]-[/]

└─$ docker stats

CONTAINER ID NAME CPU % MEM USAGE / LIMIT MEM % NET I/O BLOCK I/O PIDS

55e45b34d93d c1 0.00% 8.129MiB / 3.843GiB 0.21% 648B / 0B 30.4MB / 11.5MB 1

```

```bash

[root@55e45b34d93d /]# memload 1000

Attempting to allocate 1000 Mebibytes of resident memory...

^C

[root@55e45b34d93d /]#

┌──[root@liruilongs.github.io]-[/]

└─$ docker stats

CONTAINER ID NAME CPU % MEM USAGE / LIMIT MEM % NET I/O BLOCK I/O PIDS

55e45b34d93d c1 0.02% 165.7MiB / 3.843GiB 4.21% 648B / 0B 30.5MB / 11.5MB 3

```

**Memory limit**

```bash

┌──[root@liruilongs.github.io]-[/]

└─$ docker run -itd --name=c2 -m 200M centos

3b2df1738e84159f4fa02dadbfc285f6da8ddde4d94cb449bc775c9a70eaa4ea

┌──[root@liruilongs.github.io]-[/]

└─$ docker stats

CONTAINER ID NAME CPU % MEM USAGE / LIMIT MEM % NET I/O BLOCK I/O PIDS

3b2df1738e84 c2 0.00% 528KiB / 200MiB 0.26% 648B / 0B 0B / 0B 1

55e45b34d93d c1 0.00% 8.684MiB / 3.843GiB 0.22% 648B / 0B 30.5MB / 11.5MB 2

```

**Limiting container CPU**

```bash

┌──[root@liruilongs.github.io]-[/]

└─$ ps mo pid,psr $(pgrep cat)

┌──[root@liruilongs.github.io]-[/]

└─$ docker run -itd --name=c3 --cpuset-cpus 0 -m 200M centos

a771eed8c7c39cd410bd6f43909a67bfcf181d87fcafffe57001f17f3fdff408

```
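The `-m 200M` value is interpreted in binary units, which is why `docker stats` reports the limit as `200MiB`. A small sketch of the conversion to bytes; `to_bytes` is a hypothetical helper that accepts only a whole number with an optional `b`, `k`, `m` or `g` suffix.

```bash
#!/usr/bin/env bash
# to_bytes SIZE  (hypothetical helper)
# Convert a size such as 200M or 1g to bytes using binary multiples.
to_bytes() {
  local size=$1 num unit
  num=${size%[bBkKmMgG]}   # strip one trailing unit letter, if any
  unit=${size#"$num"}      # whatever was stripped
  case $unit in
    ''|b|B) echo "$num" ;;
    k|K)    echo $((num * 1024)) ;;
    m|M)    echo $((num * 1024 * 1024)) ;;
    g|G)    echo $((num * 1024 * 1024 * 1024)) ;;
  esac
}
```

The result can be compared with `docker inspect -f '{{.HostConfig.Memory}}' c2`, which reports the configured limit in bytes.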

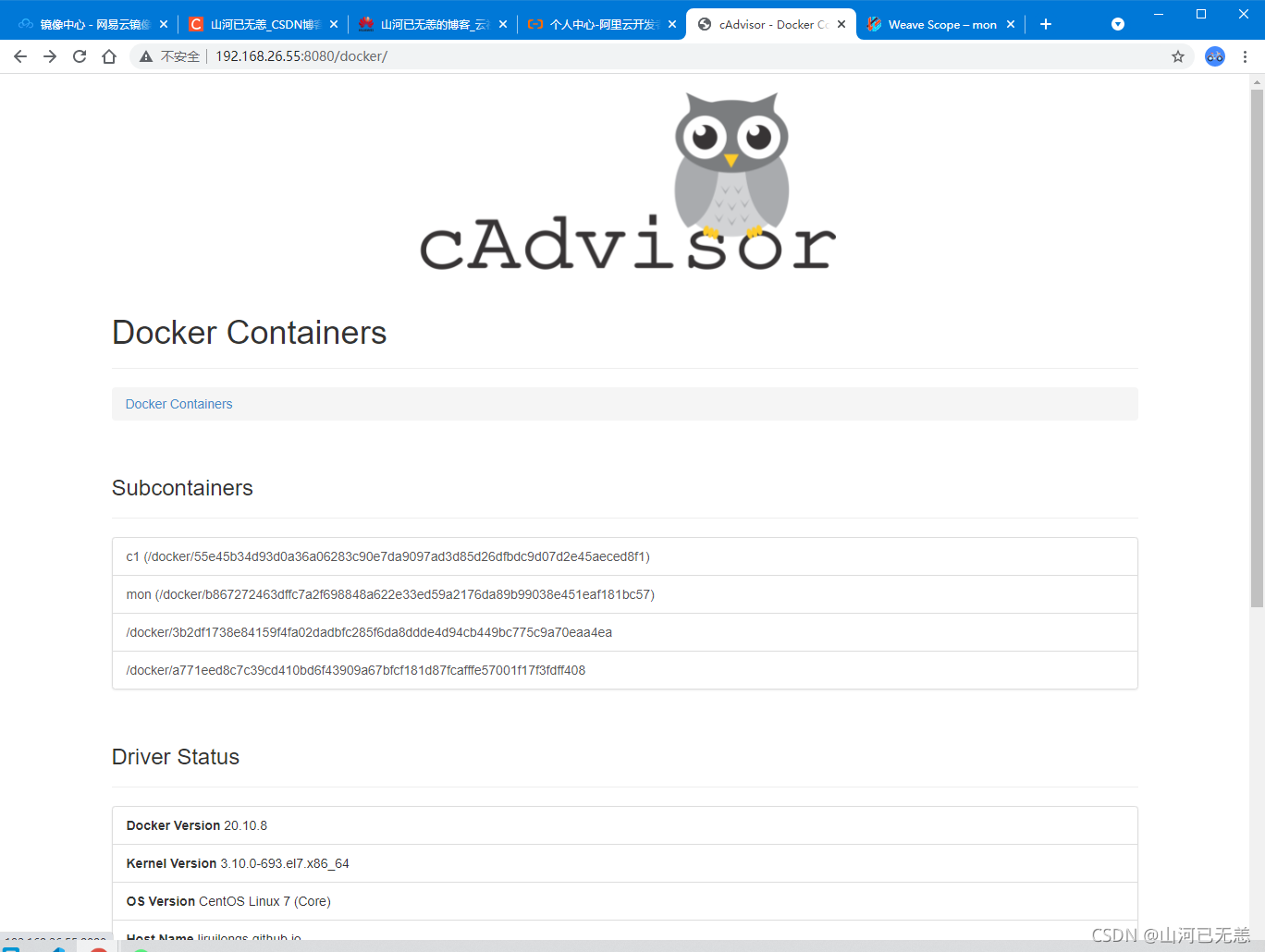

## 11. Monitoring containers

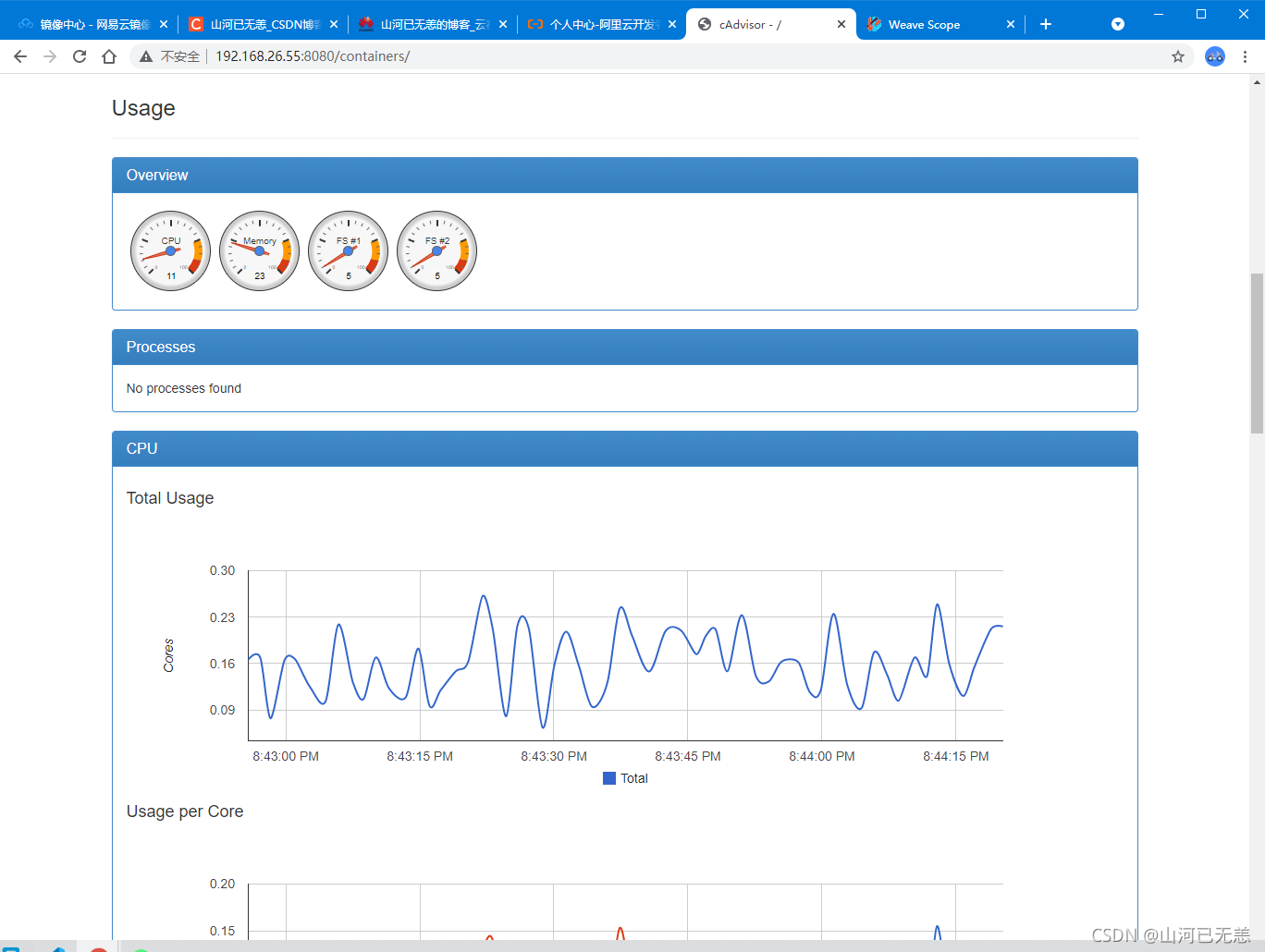

### cAdvisor: reads host information

**docker pull hub.c.163.com/xbingo/cadvisor:latest**

```bash

docker run \

-v /var/run:/var/run \

-v /sys:/sys:ro \

-v /var/lib/docker:/var/lib/docker:ro \

-d -p 8080:8080 --name=mon \

hub.c.163.com/xbingo/cadvisor:latest

```

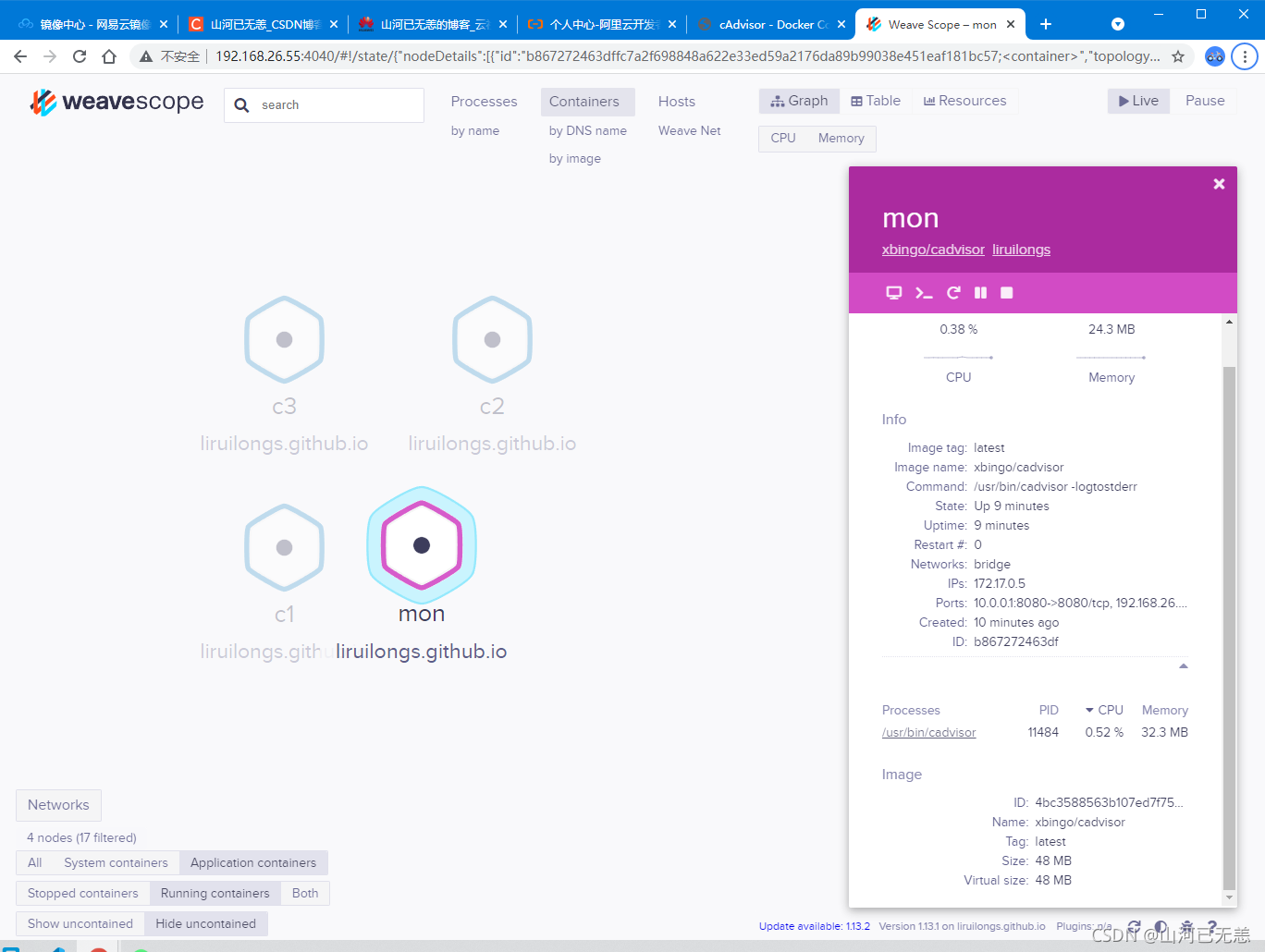

### weavescope

```bash

┌──[root@liruilongs.github.io]-[/]

└─$ chmod +x ./scope

┌──[root@liruilongs.github.io]-[/]

└─$ ./scope launch

Unable to find image 'weaveworks/scope:1.13.1' locally

1.13.1: Pulling from weaveworks/scope

c9b1b535fdd9: Pull complete

550073704c23: Pull complete

8738e5bbaf1d: Pull complete

0a8826d26027: Pull complete

387c1aa951b4: Pull complete

e72d45461bb9: Pull complete

75cc44b65e98: Pull complete

11f7584a6ade: Pull complete

a5aa3ebbe1c2: Pull complete

7cdbc028c8d2: Pull complete

Digest: sha256:4342f1c799aba244b975dcf12317eb11858f9879a3699818e2bf4c37887584dc

Status: Downloaded newer image for weaveworks/scope:1.13.1

3254bcd54a7b2b1a5ece2ca873ab18c3215484e6b4f83617a522afe4e853c378

Scope probe started

The Scope App is not responding. Consult the container logs for further details.

┌──[root@liruilongs.github.io]-[/]

└─$

```

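# II. Installing Kubernetes

## 1. Ansible configuration

Here we use Ansible to carry out the installation.

1. Set up passwordless SSH from the control machine to the managed machines

2. Write the Ansible configuration file and the host inventory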

```bash

[root@vms81 ~]# ls

anaconda-ks.cfg calico_3_14.tar calico.yaml one-client-install.sh set.sh

[root@vms81 ~]# mkdir ansible

[root@vms81 ~]# cd ansible/

[root@vms81 ansible]# ls

[root@vms81 ansible]# vim ansible.cfg

[root@vms81 ansible]# cat ansible.cfg

[defaults]

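# Inventory file: the list of hosts to manage

inventory=inventory

# Remote user name used to connect to the managed hosts

remote_user=root

# Roles directory

roles_path=roles

# Privilege escalation (sudo) settings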

[privilege_escalation]

become=True

become_method=sudo

become_user=root

become_ask_pass=False

[root@vms81 ansible]# vim inventory

[root@vms81 ansible]# cat inventory

[node]

192.168.26.82

192.168.26.83

[master]

192.168.26.81

[root@vms81 ansible]#

```
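Step 1 (passwordless SSH) is not shown in the transcript. As a sketch, the host addresses can be read from the inventory and fed to `ssh-copy-id`; `inventory_hosts` is a hypothetical helper that handles only the simple INI layout used above.

```bash
#!/usr/bin/env bash
# inventory_hosts FILE  (hypothetical helper)
# Print the host entries of an INI-style inventory, skipping [group]
# headers, comments and blank lines.
inventory_hosts() {
  awk '
    /^[ \t]*$/          { next }   # blank line
    /^[ \t]*(#|;|\[)/   { next }   # comment or [group] header
    { print $1 }
  ' "$1"
}
```

A possible use: run `ssh-keygen` once on the control machine, then `for h in $(inventory_hosts inventory); do ssh-copy-id "root@$h"; done`.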

```bash

[root@vms81 ansible]# ansible all --list-hosts

hosts (3):

192.168.26.82

192.168.26.83

192.168.26.81

[root@vms81 ansible]# ansible all -m ping

192.168.26.81 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": false,

"ping": "pong"

}

192.168.26.83 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": false,

"ping": "pong"

}

192.168.26.82 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": false,

"ping": "pong"

}

[root@vms81 ansible]#

```

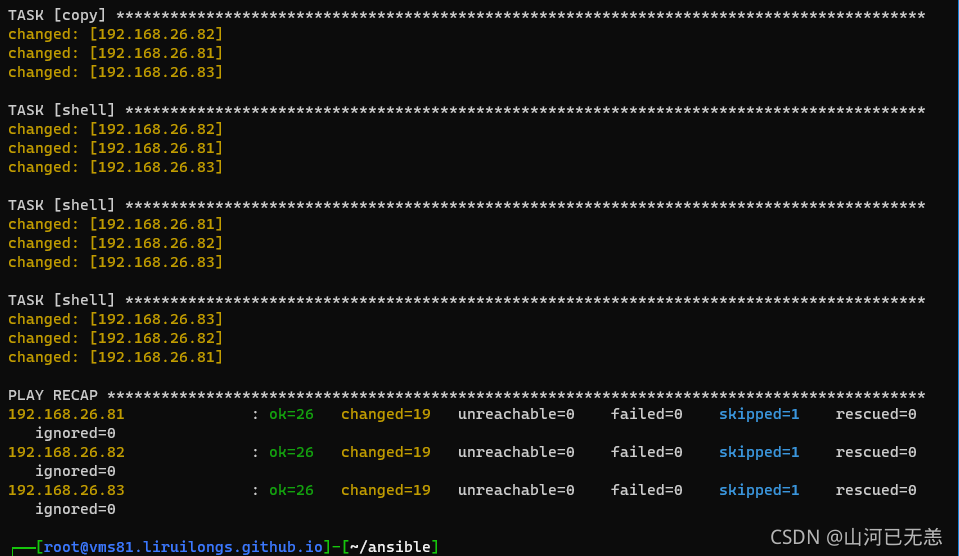

## 2. Steps on all nodes

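|Steps on all nodes|
|--|
|Disable the firewall and SELinux; set up /etc/hosts|
|Disable swap|
|Configure the yum repositories|
|Install docker-ce and load the missing image|
|Set kernel parameters|
|Install the required packages|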

```bash

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$vim init_k8s_playbook.yml

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ls

ansible.cfg init_k8s_playbook.yml inventory

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$vim daemon.json

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$cat daemon.json

{

"registry-mirrors": ["https://2tefyfv7.mirror.aliyuncs.com"]

}

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$vim hosts

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$cat hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.26.81 vms81.liruilongs.github.io vms81

192.168.26.82 vms82.liruilongs.github.io vms82

192.168.26.83 vms83.liruilongs.github.io vms83

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$vim k8s.conf

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$cat k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$cat init_k8s_playbook.yml

- name: init k8s
  hosts: all
  tasks:
    # Open the firewall (set the default zone to trusted)
    - shell: firewall-cmd --set-default-zone=trusted
    # Disable SELinux
    - shell: getenforce
      register: out
    - debug: msg="{{out}}"
    - shell: setenforce 0
      when: out.stdout != "Disabled"
    - replace:
        path: /etc/selinux/config
        regexp: "SELINUX=enforcing"
        replace: "SELINUX=disabled"
    - shell: cat /etc/selinux/config
      register: out
    - debug: msg="{{out}}"
    - copy:
        src: ./hosts
        dest: /etc/hosts
        force: yes
    # Disable swap
    - shell: swapoff -a
    - shell: sed -i '/swap/d' /etc/fstab
    - shell: cat /etc/fstab
      register: out
    - debug: msg="{{out}}"
    # Configure the yum repositories
    - shell: tar -cvf /etc/yum.tar /etc/yum.repos.d/
    - shell: rm -rf /etc/yum.repos.d/*
    - shell: wget ftp://ftp.rhce.cc/k8s/* -P /etc/yum.repos.d/
    # Install docker-ce
    - yum:
        name: docker-ce
        state: present
    # Configure the Docker registry mirror
    - shell: mkdir -p /etc/docker
    - copy:
        src: ./daemon.json
        dest: /etc/docker/daemon.json
    - shell: systemctl daemon-reload
    - shell: systemctl restart docker
    # Set kernel parameters and install the Kubernetes packages
    - copy:
        src: ./k8s.conf
        dest: /etc/sysctl.d/k8s.conf
    - shell: yum install -y kubelet-1.21.1-0 kubeadm-1.21.1-0 kubectl-1.21.1-0 --disableexcludes=kubernetes
    # Load the missing image
    - copy:
        src: ./coredns-1.21.tar
        dest: /root/coredns-1.21.tar
    - shell: docker load -i /root/coredns-1.21.tar
    # Start the service
    - shell: systemctl restart kubelet
    - shell: systemctl enable kubelet

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ls

ansible.cfg coredns-1.21.tar daemon.json hosts init_k8s_playbook.yml inventory k8s.conf

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$

```

**init_k8s_playbook.yml**

```yml

- name: init k8s
  hosts: all
  tasks:
    # Open the firewall (set the default zone to trusted)
    - shell: firewall-cmd --set-default-zone=trusted
    # Disable SELinux
    - shell: getenforce
      register: out
    - debug: msg="{{out}}"
    - shell: setenforce 0
      when: out.stdout != "Disabled"
    - replace:
        path: /etc/selinux/config
        regexp: "SELINUX=enforcing"
        replace: "SELINUX=disabled"
    - shell: cat /etc/selinux/config
      register: out
    - debug: msg="{{out}}"
    - copy:
        src: ./hosts
        dest: /etc/hosts
        force: yes
    # Disable swap
    - shell: swapoff -a
    - shell: sed -i '/swap/d' /etc/fstab
    - shell: cat /etc/fstab
      register: out
    - debug: msg="{{out}}"
    # Configure the yum repositories
    - shell: tar -cvf /etc/yum.tar /etc/yum.repos.d/
    - shell: rm -rf /etc/yum.repos.d/*
    - shell: wget ftp://ftp.rhce.cc/k8s/* -P /etc/yum.repos.d/
    # Install docker-ce
    - yum:
        name: docker-ce
        state: present
    # Configure the Docker registry mirror
    - shell: mkdir -p /etc/docker
    - copy:
        src: ./daemon.json
        dest: /etc/docker/daemon.json
    - shell: systemctl daemon-reload
    - shell: systemctl restart docker
    - shell: systemctl enable docker --now
    # Set kernel parameters and install the Kubernetes packages
    - copy:
        src: ./k8s.conf
        dest: /etc/sysctl.d/k8s.conf
    - shell: yum install -y kubelet-1.21.1-0 kubeadm-1.21.1-0 kubectl-1.21.1-0 --disableexcludes=kubernetes
    # Load the missing image
    - copy:
        src: ./coredns-1.21.tar
        dest: /root/coredns-1.21.tar
    - shell: docker load -i /root/coredns-1.21.tar
    # Start the service
    - shell: systemctl restart kubelet
    - shell: systemctl enable kubelet

```
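Before running the playbook against real machines, it can help to see what its two in-place file edits actually do. The following is a minimal sketch that applies the same `sed` expression used for `/etc/fstab`, and a `sed` equivalent of the `replace` task, to scratch copies in a temporary directory. The sample file contents are made up for illustration and are not taken from the nodes in this article; `sed -i` is used in its GNU form, as on CentOS.

```shell
#!/usr/bin/env bash
# Dry run of two playbook edits on scratch files instead of the real /etc files.
# The file contents below are hypothetical examples.
set -euo pipefail

workdir="$(mktemp -d)"

# Hypothetical fstab with a single swap entry.
cat > "$workdir/fstab" <<'EOF'
/dev/mapper/centos-root /     xfs  defaults 0 0
UUID=0000-0000          /boot xfs  defaults 0 0
/dev/mapper/centos-swap swap  swap defaults 0 0
EOF

# Hypothetical SELinux config.
cat > "$workdir/selinux_config" <<'EOF'
SELINUX=enforcing
SELINUXTYPE=targeted
EOF

# Same expression as the playbook: delete every line that contains "swap".
sed -i '/swap/d' "$workdir/fstab"

# sed equivalent of the playbook's replace task.
sed -i 's/SELINUX=enforcing/SELINUX=disabled/' "$workdir/selinux_config"

echo "--- fstab after edit ---"
cat "$workdir/fstab"
echo "--- selinux config after edit ---"
cat "$workdir/selinux_config"
```

Two things are worth noticing when reading the result. The pattern `/swap/d` removes any line that mentions `swap`, including comments, and the SELinux pattern only matches `SELINUX=enforcing`, so a host already set to `permissive` is left unchanged.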

**Check the result**

```bash

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible all -m shell -a "docker images"

192.168.26.83 | CHANGED | rc=0 >>

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/coredns/coredns v1.8.0 296a6d5035e2 11 months ago 42.5MB

192.168.26.81 | CHANGED | rc=0 >>

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/coredns/coredns v1.8.0 296a6d5035e2 11 months ago 42.5MB

192.168.26.82 | CHANGED | rc=0 >>

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/coredns/coredns v1.8.0 296a6d5035e2 11 months ago 42.5MB

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$

```
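The image check above covers only one of the things the playbook changed. As a complementary sketch, the script below checks that swap is really off on a Linux host by reading `/proc/swaps`, which contains a header line followed by one line per active swap area. The function name `swap_is_off` and its optional file argument are assumptions introduced here for illustration; they are not part of the original notes.

```shell
#!/usr/bin/env bash
# Returns 0 when no swap area is active.
# The optional argument (hypothetical, added for testing) is a file in /proc/swaps format.
swap_is_off() {
  local swaps_file="${1:-/proc/swaps}"
  # Line 1 is the column header; every further line is one active swap area.
  [ "$(tail -n +2 "$swaps_file" 2>/dev/null | wc -l)" -eq 0 ]
}

if swap_is_off; then
  echo "swap: off"
else
  echo "swap: still active, run 'swapoff -a'"
fi
```

Saved under a name such as `check_swap.sh` (a hypothetical file name), it could be run on every node with Ansible's `script` module, for example `ansible all -m script -a "./check_swap.sh"`.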

## 3. Operations on the master and worker nodes

```bash

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible master -m shell -a "kubeadm init --image-repository registry.aliyuncs.com/google_containers --kubernetes-version=v1.21.1 --pod-network-cidr=10.244.0.0/16"

192.168.26.81 | CHANGED | rc=0 >>

[init] Using Kubernetes version: v1.21.1

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local vms81.liruilongs.github.io] and IPs [10.96.0.1 192.168.26.81]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost vms81.liruilongs.github.io] and IPs [192.168.26.81 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost vms81.liruilongs.github.io] and IPs [192.168.26.81 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 23.005092 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.21" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node vms81.liruilongs.github.io as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node vms81.liruilongs.github.io as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: 8e0tvh.1n0oqtp4lzwauqh0

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/